ORIGINAL ARTICLE

CHAGAS, Edgar Thiago de Oliveira [1]

CHAGAS, Edgar Thiago de Oliveira. Artificial intelligence, quantum computation, robotics and Blockchain. What is the reality of these technologies in current and future times? Revista Científica Multidisciplinar Núcleo do Conhecimento. Year 04, Ed. 06, Vol. 09, pp. 72-95. June 2019. ISSN: 2448-0959

SUMMARY

In areas such as artificial intelligence, other types of computers and architectures are needed. In image recognition or speech processing algorithms, for example, the sequential execution and storage model of the von Neumann architecture (very effective for other applications) becomes a bottleneck that limits the performance of these systems. On this premise, the present work analyzes artificial intelligence, quantum computation, robotics, and blockchain in order to reflect on the reality of these technologies in current and future times.

Keywords: artificial intelligence, quantum computation, robotics, blockchain.

1. INTRODUCTION

This article reflects on concepts related to classical computation and quantum computation. To this end, it analyzes current computers, their limitations, and perceptions about the future, introducing the basic concepts of the von Neumann architecture and of quantum computation. The objective is to discuss the meaning of these concepts from the standpoint of computer science. The research traces the history of microprocessor development and gives an overview of quantum computation, with emphasis on the main features that distinguish traditional computing from quantum computation. Finally, it reflects on the main technical difficulties encountered in building quantum computers and reviews some of the research in this field.

2. CLASSIC COMPUTING

Today, computers perform a wide variety of activities that require considerable processing and execution time. The effort to reduce the execution time of these activities has led researchers to develop ever faster machines. However, there will come a time when physical limits prevent the creation of faster devices: the laws of physics impose a limit on the miniaturization of circuits. In the future, transistors will be so small that silicon components will be almost molecular in size. At such microscopic distances, the laws of quantum mechanics take effect, allowing electrons to jump from one point to another without crossing the space between them (quantum tunneling), which causes many problems.

Current computers are based on the von Neumann architecture, named after John von Neumann, a Hungarian-born mathematician of Jewish origin who became a naturalized American citizen. Von Neumann contributed to set theory, functional analysis, ergodic theory, quantum mechanics, computer science, economics, game theory, numerical analysis, the hydrodynamics of explosions, statistics, and many other areas of mathematics. He is considered one of the most important mathematicians of the twentieth century. A computer based on the von Neumann architecture clearly separates the elements that process information from those that store it: it has a processor and a memory connected by a communication bus. Two aspects thus emerged in von Neumann’s computer: the organization of memory and the processing of data.

Modern computers all follow the same pattern because they are based on the von Neumann architecture. This design divides the computer hardware into three main components: memory, the CPU, and input and output devices. Memory stores program instructions and data. The CPU fetches instructions and data from memory, executes them, and stores the resulting values back in memory. Input devices (such as the keyboard, mouse, and microphone) and output devices (such as the monitor, speakers, and printer) allow the user to interact with the computer, supplying data and instructions and displaying the results of processing. Words stored in memory can contain either instructions or data, and processing is sequential, although it may include conditional or unconditional branches (jumps).

These characteristics are reflected in the presence of a program counter (incremented after each instruction) and of a main memory that holds executable programs together with their data. These are two of the most important features of the von Neumann architecture, because they define not only the computer itself but also everything built on it, including the complex algorithms devised to work around some of its efficiency problems. To illustrate their importance, consider the following example: when programmers develop software, they write an algorithm (a set of instructions) to solve the problem.

Most programmers design and implement such a solution sequentially, not only because people think in sequence, but because the computers built and used over the last 50 years work in sequence. Programming paradigms (structured, logic, or functional) and sequential processing are direct consequences of the von Neumann architecture, and even newer paradigms, such as object orientation, remain bound by these concepts. Despite some limitations, this way of organizing computers is very effective for most of the tasks modern computers perform. For mathematical calculations, text editing, data storage, or Internet access, there may be no better machine than a von Neumann computer.
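The stored-program, sequential model described above can be illustrated with a deliberately tiny simulator. This is a minimal sketch, not a model of any real processor: the function `run`, the program `MULTIPLY_PROGRAM`, the instruction names (LOAD, ADD, SUB, STORE, JUMPZ, JUMP, HALT), and the memory layout are all hypothetical. What it shows is the von Neumann idea itself: program and data share one memory, the program counter advances after every fetch, and a branch simply overwrites it.

```python
# Toy von Neumann machine: a single memory list holds both instructions and data.
# The instruction set below is hypothetical and exists only for illustration.

def run(memory: list, max_steps: int = 10_000) -> list:
    acc = 0  # accumulator register inside the CPU
    pc = 0   # program counter: address of the next instruction
    for _ in range(max_steps):
        op, arg = memory[pc]  # fetch the instruction stored in memory
        pc += 1               # advance the program counter
        if op == "LOAD":
            acc = memory[arg]
        elif op == "ADD":
            acc += memory[arg]
        elif op == "SUB":
            acc -= memory[arg]
        elif op == "STORE":
            memory[arg] = acc     # results go back into the same memory
        elif op == "JUMPZ":       # conditional branch
            if acc == 0:
                pc = arg
        elif op == "JUMP":        # unconditional branch
            pc = arg
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction {op!r} at address {pc - 1}")
    raise RuntimeError("step limit reached")


# Hypothetical program: multiply memory[10] by memory[11] by repeated addition,
# leaving the result in memory[12].
MULTIPLY_PROGRAM = [
    ("LOAD", 11),    # 0: acc = counter
    ("JUMPZ", 9),    # 1: stop when the counter reaches zero
    ("LOAD", 12),    # 2: acc = partial result
    ("ADD", 10),     # 3: acc += multiplicand
    ("STORE", 12),   # 4: save partial result
    ("LOAD", 11),    # 5: acc = counter
    ("SUB", 13),     # 6: acc -= 1
    ("STORE", 11),   # 7: save counter
    ("JUMP", 0),     # 8: repeat
    ("HALT", None),  # 9
    3,               # 10: multiplicand (data)
    4,               # 11: counter (data)
    0,               # 12: result (data)
    1,               # 13: constant one (data)
]

print(run(list(MULTIPLY_PROGRAM))[12])  # 12
```

Because instructions and data live in the same list, a STORE to the wrong address could just as easily overwrite the program; that shared memory is exactly what characterizes this design.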

However, in specific areas such as cryptography, a new computing tool may be needed, together with a new strategy for approaching and solving problems. Today’s encryption programs work effectively because the numbers they rely on cannot feasibly be factored: factoring integers with more than a few hundred digits is beyond the capacity of the most modern machines. This difficulty cannot be attributed to hardware alone; it may stem from software as well as hardware.

It cannot be stated with certainty that current machines perform this kind of task inefficiently because they lack processing power or speed. The problem may be a lack of knowledge (or creativity) in designing the necessary algorithms: current processors may even be sufficient to factor very large numbers, but we do not know how to design such an algorithm. On the other hand, the lack of such an algorithm may itself result from the lack of a sufficiently powerful computer. The research is not conclusive, since it is unknown whether the problem lies in hardware, software, or both.
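The difficulty of factoring discussed above can be made concrete with trial division, the most naive method. This sketch is illustrative only: practical attacks use far faster algorithms (such as the general number field sieve), which are nevertheless still super-polynomial in the number of digits. The function name `trial_division` and its step counter are our own additions.

```python
import math


def trial_division(n: int) -> tuple[int, int, int]:
    """Split n > 1 as p * q, where p is its smallest prime factor.

    Also returns how many candidate divisors were tried. For n = p * q with
    p close to q, that count grows like sqrt(n), i.e. roughly 10**(d/2)
    for a d-digit n: every two extra digits multiply the work by about 10.
    """
    if n % 2 == 0:
        return 2, n // 2, 1
    tried = 1  # the test for divisibility by 2
    for d in range(3, math.isqrt(n) + 1, 2):
        tried += 1
        if n % d == 0:
            return d, n // d, tried
    return n, 1, tried  # no divisor found: n is prime


print(trial_division(15))               # (3, 5, 2)
print(trial_division(10_007 * 10_009))  # (10007, 10009, 5004)
```

Multiplying two 5-digit primes is instant, yet undoing it already takes about five thousand divisions; at a few hundred digits the same approach would need more steps than could ever be executed.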

2.1 AUTOMATION, THE WAY TO THE MODERN WORLD

In the capitalist system, globalization prevails in the world’s labor markets, and automation is the basis on which companies remain competitive. This is already the reality in industry, where technology has become an essential element. The theme is also related to employment, since the number of jobs tends to be inversely proportional to the degree of automation. Automation is a necessary investment for large, medium, and small companies alike: obsolete machines hinder production and are a major factor in many companies leaving the market. In this context, computers streamline bureaucratic services and deliver accurate, fast information to companies, while robots also make the market more agile.

Technology has clearly reduced the number of jobs, but it has also made consumers more demanding and increased agility and competitiveness in trade. As a result, company expenses have fallen and profits have risen. Formal employment burdens companies with payroll taxes; machines, by contrast, are expensive investments, but they eliminate or reduce these costs and quickly return the invested capital. Workers must constantly retrain and improve themselves through courses and training, because the labor market is increasingly competitive, and those who do not end up on the margins of progress.

In capitalist countries, there is growing concern about developments in fields such as biology and microelectronics. This concern extends to the race for modernization, since machines quickly become obsolete, but it also concerns the workers who lose their jobs. Companies often send long-time employees back to the classroom, which shows that they are relying on skilled labor to gain the dynamism and quality needed to compete.

2.2 INDUSTRIAL AUTOMATION

Industrial automation began with automobile assembly lines, one of the most notable examples being Henry Ford, whose methods gained ground in the market in the 1920s. Since then, technological progress has accelerated in the many areas connected to automation, significantly increasing the quality and quantity of production, with an emphasis on cost reduction. The advance of automation is generally tied to the evolution of microelectronics, which has grown in strength and reach in recent years. One example of this process is the creation of the Programmable Logic Controller (PLC).

The PLC emerged in the 1960s to replace relay control panels, whose disadvantages outweighed their advantages, mainly because they occupied a great deal of physical space. Whenever the logic of a process, implemented through fixed electrical interconnections, had to be changed, production had to be interrupted so that the panel’s control elements could be rewired. This took a long time, causing large production losses, and the panels also consumed a lot of energy.

In 1968, an experiment in logic control was carried out at the Hydramatic division of General Motors Corporation. The aim was a programmable device, built around a computer, whose software could drive peripheral devices and perform input and output operations. The experiment significantly reduced the automation costs of the time. Later, with the advent of microprocessors, large computers were no longer needed, and the PLC (the name given to the result of the experiment) became a standalone unit that gained several features.

These features include an easy-to-use programming and operating interface; arithmetic and data-manipulation instructions; communication over PLC networks; and configurations tailored to each purpose through interchangeable modules. With the factory floor increasingly integrated with the corporate environment, organizational decisions about the production system began to be made with a focus on quality, based on concrete, up-to-date data from the various control units. PLC manufacturers therefore had to master the combination of software and hardware needed to produce SCADA (Supervisory Control and Data Acquisition) systems and other specialized systems.

SCADA software thus comes in different sizes, runs on different operating systems, and offers a range of functions; to compete as complete tools, these packages are combined with the PLC. In instrumentation, however, this revolution progressed more slowly, because the instruments had to be given more intelligence and made to communicate over a network; that is, the 4-20 mA standard for transmitting analog signals had to give way to digital transmission. The transition began with a protocol that reused the existing wiring, superimposing digital signals on the 4-20 mA analog signal. This protocol, HART, was no more than a stopgap, although it remains in use today.
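A 4-20 mA transmitter encodes a measurement as a current that varies linearly between the two endpoints, with 4 mA rather than 0 mA as the bottom of the scale, so that a broken loop can be told apart from a zero reading. The sketch below shows that scaling. The function names, the 3.8 mA fault threshold, and the 0-150 °C range are assumptions chosen for illustration, not values taken from the text or from any specific standard.

```python
LIVE_ZERO_MA = 4.0     # 4 mA represents the bottom of the measuring range
FULL_SCALE_MA = 20.0   # 20 mA represents the top of the range
FAULT_BELOW_MA = 3.8   # assumed fault threshold (e.g. broken wire); site-specific


def current_to_value(ma: float, lo: float, hi: float) -> float:
    """Convert a 4-20 mA loop current to engineering units in [lo, hi]."""
    if ma < FAULT_BELOW_MA:
        raise ValueError(f"{ma} mA is below the live zero: possible open loop")
    fraction = (ma - LIVE_ZERO_MA) / (FULL_SCALE_MA - LIVE_ZERO_MA)
    return lo + fraction * (hi - lo)


def value_to_current(value: float, lo: float, hi: float) -> float:
    """Inverse mapping, as a transmitter would perform it."""
    fraction = (value - lo) / (hi - lo)
    return LIVE_ZERO_MA + fraction * (FULL_SCALE_MA - LIVE_ZERO_MA)


# Hypothetical temperature transmitter ranged 0-150 degrees C.
print(current_to_value(12.0, 0.0, 150.0))  # 75.0
print(value_to_current(75.0, 0.0, 150.0))  # 12.0
```

A single analog current carries only this one value; digital protocols such as HART were added so that the same instrument could also report diagnostics and configuration data.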

This transition also reflects how the industry reacted to the arrival of new technologies: afterwards, a large number of standards and protocols were created, each claiming to be unique and superior. Today, PLCs are used above all to implement sequential interlocking panels, control loops, and manufacturing cell control systems, among other applications. They are also found in packaging, bottling, canning, material transport and handling, machining, power generation, building air-conditioning control, safety systems, automated assembly, paint lines, and water treatment, in the food, beverage, automotive, chemical, textile, plastics, pulp and paper, pharmaceutical, steel, and metallurgical industries.
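Sequential interlocking logic is normally written in ladder diagram or another IEC 61131-3 language and evaluated in a repeating scan cycle: read all inputs, execute the logic, then update all outputs. The Python sketch below only mimics that cycle for a classic start/stop seal-in circuit with a guard interlock; the class `Inputs`, the functions `scan` and `run_scans`, the signal names, and the event sequence are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Inputs:
    start: bool         # momentary START push button
    stop: bool          # STOP push button, True while pressed
    guard_closed: bool  # safety interlock: machine guard closed


def scan(inputs: Inputs, motor_on: bool) -> bool:
    """One scan of a start/stop seal-in rung with a safety interlock.

    The motor runs if START is pressed or it was already running (seal-in),
    and only while STOP is released and the guard is closed.
    """
    return (inputs.start or motor_on) and not inputs.stop and inputs.guard_closed


def run_scans(sequence: list[Inputs]) -> list[bool]:
    motor_on = False
    history = []
    for inputs in sequence:                # 1. read the input image
        motor_on = scan(inputs, motor_on)  # 2. execute the logic
        history.append(motor_on)           # 3. update the output
    return history


events = [
    Inputs(start=True, stop=False, guard_closed=True),    # press START
    Inputs(start=False, stop=False, guard_closed=True),   # release START: stays on
    Inputs(start=False, stop=False, guard_closed=False),  # guard opened: trips
    Inputs(start=False, stop=False, guard_closed=True),   # guard closed: stays off
    Inputs(start=True, stop=False, guard_closed=True),    # restart
    Inputs(start=False, stop=True, guard_closed=True),    # press STOP
]
print(run_scans(events))  # [True, True, False, False, True, False]
```

Note that closing the guard again does not restart the motor on its own; an operator must press START, which is the behavior an interlock is meant to enforce.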

3. HISTORY

In 1965, Gordon Moore, co-founder of Intel, one of the leading microprocessor manufacturers, made a prediction that became famous as Moore’s law. According to it, the number of transistors on a chip would double at roughly constant intervals of between one and three years, which implies exponential growth in processing capability. The law has held from then until the present day, a period in which processing power has roughly doubled every 18 months.

3.1 THE PROMISE OF QUANTUM PROCESSORS

Quantum processors seem to be the future of computing. The current architecture, including the use of transistors to build processors, will inevitably reach its limits within a few years, and a more efficient architecture will then be needed. Why not replace the transistor with an atom? Quantum processors could potentially perform in seconds calculations that current processors could not complete in millions of years.

3.2. WHY QUANTUM PROCESSORS

Microprocessor designers have indeed done remarkable work. In the three decades since the Intel 4004, the world’s first microprocessor, processing speed has grown enormously. To give an idea, the i8088, released in 1979 and used in the IBM XT, had an estimated processing capacity of only 0.25 megaflops (MFLOPS), or 250,000 operations per second. The Pentium 100 processed 200 MFLOPS, or 200 million operations per second, while the 1.1 GHz Athlon processes nearly 10 gigaflops (GFLOPS), 40,000 times faster than the 8088.
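The speed-up factors quoted above follow directly from the figures in the text:

```latex
\frac{10\ \text{GFLOPS}}{0.25\ \text{MFLOPS}}
  = \frac{10 \times 10^{9}}{0.25 \times 10^{6}}
  = 4 \times 10^{4},
\qquad
\frac{200\ \text{MFLOPS}}{0.25\ \text{MFLOPS}} = 800
```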

The problem is that all current processors share a common limitation: they are made of transistors. The way to produce faster chips is to shrink the transistors they contain. The transistors of the 1960s were roughly the size of a match head, while current transistors measure only 0.18 micron (1 micron = 0.001 mm). However, we are approaching the physical limits of matter, so to move forward, transistors will have to be replaced with more efficient structures. The future of processors therefore seems to depend on quantum processors.

3.3. MOORE’S LAW

One of the best-known concepts in computing is the so-called Moore’s law. In 1965, Gordon E. Moore, then at Fairchild Semiconductor and later a co-founder and president of Intel, predicted that the number of transistors on a chip would increase by 60%, at the same cost, every 18 months. By this prediction, computer speed would double every year and a half, and the trend has held since the launch of the first IBM PC in 1981. The first microprocessor manufactured by Intel Corporation was the 4004, a 4-bit CPU with “only” 2,300 transistors. Its operating speed was 400 kHz (kilohertz, not megahertz), and it was manufactured in a 10-micron process. Released in 1971, it was the first single-chip microprocessor and the first to be commercially available. Originally designed as a component for calculators, the 4004 was later put to a variety of uses.
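Moore’s law is an exponential model, N(t) = N0 · 2^(t/T), where T is the doubling period. The minimal sketch below, with hypothetical function names, takes the 4004’s 2,300 transistors (1971) and the 2.27 billion transistors of the Core i7-3960X discussed next (taken here as released in late 2011), estimates the doubling period these two data points imply, and compares the result with an 18-month rule.

```python
import math


def project(n0: float, years: float, doubling_years: float) -> float:
    """Count after `years` if it doubles every `doubling_years`: N = N0 * 2**(t / T)."""
    return n0 * 2 ** (years / doubling_years)


def implied_doubling_time(n0: float, n1: float, years: float) -> float:
    """Solve N1 = N0 * 2**(t / T) for the doubling period T."""
    return years / math.log2(n1 / n0)


# Figures from the text: Intel 4004 (1971) and Core i7-3960X (assumed 2011).
T = implied_doubling_time(2_300, 2.27e9, 2011 - 1971)
print(f"implied doubling time: {T:.2f} years")  # about 2.0 years

# What a strict 18-month doubling would have predicted for the same 40 years.
print(f"{project(2_300, 40, 1.5):.3g} transistors")
```

With these inputs the implied doubling period comes out close to two years, whereas an 18-month doubling over the same 40 years would predict a count roughly a hundred times larger; such estimates are very sensitive to the chosen endpoints.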

Currently, Intel’s most advanced processor is the Core i7-3960X, with six physical cores and twelve threads, running at 3.3 GHz and up to 3.9 GHz with Turbo Boost technology. This silicon chip has 2.27 billion transistors built in a 32-nanometer process, features more than a thousand times smaller than the diameter of a strand of hair. However, at some point in the future (even the experts do not know exactly when), the limits of silicon will be reached and the technology will be unable to advance, so chip manufacturers will have to migrate to another material. That day is still far off, but researchers are already exploring alternatives. Graphene receives much attention as a potential successor to silicon, but OPEL Technologies believes that the future lies in a compound called gallium arsenide (GaAs).

Even if this new technology is in turn superseded, its successor will eventually reach the atomic limit. At that scale, it is physically impossible to make things any smaller. This point deserves careful analysis, because it leads to one of the most important conclusions about digital computation. Since the advent of the first digital computer, there has been no fundamental change; what has happened over the last 60 years is technological development. Computers became smaller and faster as vacuum tubes gave way to transistors and, finally, to microchips. This evolution, however, concerns speed: no computer with fundamentally greater computing power has ever been built.

In this sense, all current computers are still XTs (the XT was one of IBM’s first personal computers; like the original IBM PC, it used the Intel 8088, a lower-cost version of the 8086). Computers have developed in terms of speed, but not in terms of computing power. Sooner or later, digital technology will inevitably reach its limits, and from a physical standpoint it will become impossible to increase processor speed further. Progress will then require changing the computer itself or discovering new technologies, which means modifying the von Neumann architecture, that is, reorganizing the computer’s components to improve the device’s functionality.

This would remove some of the limitations imposed by the von Neumann architecture. Several unconventional computing approaches are being studied today, some of which even use DNA (deoxyribonucleic acid) molecules. Among the alternatives to digital computers under study, the most interesting and promising are quantum computers. The next section discusses the development of quantum computing, describing its basic concepts, origins, evolution, scope, future prospects, and challenges.

4. QUANTUM COMPUTATION

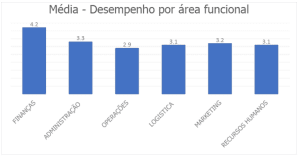

Quantum computers operate according to the rules of quantum uncertainty. At the level of a single particle, nothing is absolute: an electron’s spin can point one way or the other, but it can also be in a superposition of both. In quantum computers, the basic unit of information is the quantum bit (qubit). Like regular bits (binary digits), qubits can take the values 0 or 1. The difference is that a qubit can hold the values 0 and 1 at the same time, and it is in this particular property that all the computational power of quantum computers lies.

Figure 1: Quantum computation
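In standard notation, the superposition described above is written as follows, with the squared magnitudes of the complex amplitudes giving the probability of each measurement outcome:

```latex
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle,
\qquad \alpha, \beta \in \mathbb{C},
\qquad |\alpha|^{2} + |\beta|^{2} = 1

P(0) = |\alpha|^{2}, \qquad P(1) = |\beta|^{2}

\text{e.g. } \alpha = \beta = \tfrac{1}{\sqrt{2}}
\;\Rightarrow\; P(0) = P(1) = \tfrac{1}{2}
```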

Quantum computation exploits the coherent superposition of quantum states to achieve quantum parallelism: performing the same operation on different qubit values simultaneously. This is possible only because of the fundamental difference between qubits and classical bits: while a classical bit can be only in state 0 or state 1, a qubit can be in a superposition of the states 0 and 1. Two qubits can exist as a combination of all four possible two-bit values (00, 01, 10, and 11), and adding a third qubit allows a combination of all eight possible three-bit values. The state space grows exponentially: n qubits can hold a superposition of 2^n values. A collection of qubits can therefore represent a whole range of numbers at once, and a quantum computer can process all of these inputs simultaneously.
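The exponential growth can be seen in a small state-vector simulation. This sketch is written from scratch in plain Python rather than with any quantum computing library, and the function name `hadamard_all` is hypothetical. It applies a Hadamard gate to each of n qubits starting from |00...0⟩; the result is an equal superposition of all 2^n basis values, and the list that stores it doubles in length with every added qubit.

```python
import math


def hadamard_all(n: int) -> list[complex]:
    """State vector after applying a Hadamard gate to each of n qubits in |00...0>.

    A classical simulation must store all 2**n complex amplitudes.
    """
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    h = 1 / math.sqrt(2)
    for q in range(n):
        bit = 1 << q
        new = [0j] * len(state)
        for i, amp in enumerate(state):
            if i & bit:  # qubit q is 1: H|1> = (|0> - |1>)/sqrt(2)
                new[i ^ bit] += h * amp
                new[i] -= h * amp
            else:        # qubit q is 0: H|0> = (|0> + |1>)/sqrt(2)
                new[i] += h * amp
                new[i | bit] += h * amp
        state = new
    return state


for n in (1, 2, 3):
    probs = [round(abs(a) ** 2, 6) for a in hadamard_all(n)]
    print(n, len(probs), probs)
# 3 qubits -> 8 amplitudes, each outcome with probability 1/8
```

The simulation makes the cost explicit: 50 qubits would already require about 10^15 amplitudes, which is why simulating quantum computers classically quickly becomes impractical.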

The concept of quantum computation is not as complex as it may seem: as in a classical computer, the basic unit takes the values 0 or 1, and it is the particular way quantum structures represent this unit that makes them so powerful. The approach described above is based on quantum spin, but quantum-dot cellular automata have also been proposed as a way to build quantum structures. The basic unit of this type of computer is a cell of four quantum dots holding two electrons, which seek to settle into the stationary state that consumes the least energy. Coulomb’s law states that the repulsive force between two charges is inversely proportional to the square of the distance between them, so the closer the electrons are, the more strongly they repel each other. The electrons therefore tend to occupy diagonally opposite dots of the cell, and the two possible diagonal arrangements play the role that the two spin orientations play in a spin-based quantum computer. However, this technique requires a very low operating temperature: 70 mK.
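The preference for diagonal occupancy follows from electrostatics: the potential energy of two like charges is U = k·e²/r, so the arrangement with the largest separation has the lowest energy. The sketch below enumerates the six ways of placing two electrons in an idealized square cell; the unit geometry, the dot names, and the function names are assumptions, and effects such as tunneling and the influence of neighboring cells are ignored.

```python
import itertools
import math

# Idealized four-dot cell: dots at the corners of a unit square (arbitrary units).
DOTS = {
    "top_left": (0.0, 1.0),
    "top_right": (1.0, 1.0),
    "bottom_right": (1.0, 0.0),
    "bottom_left": (0.0, 0.0),
}


def coulomb_energy(p, q) -> float:
    """Electrostatic energy of two electrons, in units where k * e**2 = 1: U = 1 / r."""
    return 1.0 / math.dist(p, q)


def ground_states():
    """Return every placement of the two electrons that minimizes the energy."""
    energies = {
        pair: coulomb_energy(DOTS[pair[0]], DOTS[pair[1]])
        for pair in itertools.combinations(DOTS, 2)
    }
    lowest = min(energies.values())
    return [pair for pair, e in energies.items() if math.isclose(e, lowest)]


print(ground_states())
# [('top_left', 'bottom_right'), ('top_right', 'bottom_left')]
```

Exactly two configurations tie for the minimum, which is what allows a single cell to encode one binary value.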

These quantum cells are combined into a five-cell structure: three input cells, one central processing cell, and one output cell. This structure is called a three-input majority gate. In basic terms, it works as follows: electron repulsion drives the structure toward its lowest-energy polarization, so the central cell is induced to assume the state held by the majority of the input cells. Finally, the output cell copies the result, so that its own polarization does not affect the calculation in the processing cell.
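Logically, the five-cell structure computes the majority function of its three inputs. A short sketch of that logic, with hypothetical function names: fixing one input permanently to 0 or 1 turns the majority gate into an AND or an OR gate, a standard way of deriving basic logic from this arrangement.

```python
from itertools import product


def majority(a: int, b: int, c: int) -> int:
    """Three-input majority: the central cell settles into the state held by most inputs."""
    return 1 if a + b + c >= 2 else 0


def and_gate(a: int, b: int) -> int:
    return majority(a, b, 0)  # one input permanently fixed to 0


def or_gate(a: int, b: int) -> int:
    return majority(a, b, 1)  # one input permanently fixed to 1


for a, b, c in product((0, 1), repeat=3):
    print(a, b, c, "->", majority(a, b, c))
```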

Some problems remain, however, not in the architecture but in the construction of quantum computers. One source of error is the environment itself: interactions with the environment can alter the qubits, causing inconsistencies that invalidate the computation (an effect known as decoherence). Another difficulty is that, according to quantum physics, measuring or observing a quantum system destroys the superposition of its states. That is, if you read the data while a program is running on a quantum computer, all of the processing will be lost.
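The effect of reading out a quantum register can be mimicked classically. In this sketch, a plain state-vector model with names of our own choosing rather than a physical simulation, measurement picks an outcome with probability equal to the squared amplitude and then replaces the state with that single basis state, so a second reading only repeats the first.

```python
import random


def measure(state: list[complex], rng: random.Random) -> tuple[int, list[complex]]:
    """Measure every qubit of a state vector in the computational basis.

    The outcome i is drawn with probability |amplitude_i|**2 (Born rule);
    afterwards the state collapses to the basis state |i>, so the other
    amplitudes, and the superposition, are lost.
    """
    probabilities = [abs(a) ** 2 for a in state]
    outcome = rng.choices(range(len(state)), weights=probabilities)[0]
    collapsed = [0j] * len(state)
    collapsed[outcome] = 1 + 0j
    return outcome, collapsed


rng = random.Random(42)
plus_plus = [0.5 + 0j] * 4  # two qubits in an equal superposition
first, after = measure(plus_plus, rng)
second, _ = measure(after, rng)
print(first == second)      # True: re-reading gives no new information
```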

4.1. THE SHOR ALGORITHM

The development of quantum computation began in the 1950s, when the application of the laws of quantum mechanics to computer science was first considered. In 1981, a conference on this subject was held at the Massachusetts Institute of Technology (MIT), at which physicist Richard Feynman proposed using quantum systems as computers, arguing that they would have more processing power than ordinary computers. In 1985, David Deutsch of the University of Oxford described, in theory, the first universal quantum computer: a quantum Turing machine capable of simulating any other quantum computer. Research on the subject then made no significant progress until almost a decade later, when Peter Shor, a researcher at AT&T, published in 1994 an algorithm that could factor integers much faster than traditional computers.

The algorithm exploits the properties of a quantum computer to factor large integers (numbers on the order of 10^200) in polynomial time. Known as Shor’s algorithm, it was published in the paper “Algorithms for quantum computation: discrete logarithms and factoring”. It uses quantum superposition, by means of a specific quantum function, to reduce the running time of the problem from the super-polynomial time of the best known classical algorithms to polynomial time. Understanding the quantum functions used in Shor’s algorithm requires a fairly extensive and complex mathematical treatment that is beyond the scope of this article.
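Shor’s algorithm reduces factoring to finding the order r of a number a modulo N, that is, the smallest r with a^r ≡ 1 (mod N); only that step needs a quantum computer. The sketch below keeps the classical reduction and replaces the quantum step with brute-force search, so it runs in exponential time and merely illustrates the logic. The function names `find_order`, `split`, and `factor` are hypothetical.

```python
import math
import random
from typing import Optional


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r = 1 (mod n), assuming gcd(a, n) == 1.

    Classical brute force, exponential in the number of digits of n; this
    is the step Shor's algorithm performs efficiently on a quantum computer.
    """
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r


def split(n: int, a: int) -> Optional[tuple[int, int]]:
    """Shor's classical reduction: turn the order of a mod n into a factor of n."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g  # a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None       # odd order: try another a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None       # a**(r/2) = -1 (mod n): try another a
    p = math.gcd(y - 1, n)
    return p, n // p


def factor(n: int, seed: int = 0) -> tuple[int, int]:
    """Try random bases until one yields a split (n odd, composite, not a prime power)."""
    rng = random.Random(seed)
    while True:
        result = split(n, rng.randrange(2, n - 1))
        if result is not None:
            return result


print(split(15, 7))  # (3, 5)
```

For N = 15 and a = 7, the order is 4, so 7² mod 15 = 4, and gcd(4 - 1, 15) = 3 reveals the factor 3, with 15 / 3 = 5 as its cofactor.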

The most direct application of Shor’s algorithm is in cryptography. The security of public-key cryptographic systems depends on the difficulty of factoring very large numbers, so a working computer capable of performing these calculations quickly would compromise it. The U.S. space agency (NASA) and Google are investing in quantum computing as the future of computing and are preparing to use the latest D-Wave machines.
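Why factoring matters can be shown with textbook RSA. This is a toy sketch with tiny primes and no padding, so it is insecure by design (real deployments use moduli of 2048 bits or more together with padding schemes such as OAEP); the function names are our own. Anyone able to factor the public modulus n can recompute the private exponent d.

```python
import math


def make_keys(p: int, q: int, e: int) -> tuple[tuple[int, int], int]:
    """Textbook RSA key generation: public key (n, e), private exponent d."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse of e (Python 3.8+)
    return (n, e), d


def smallest_factor(n: int) -> int:
    for f in range(2, math.isqrt(n) + 1):
        if n % f == 0:
            return f
    return n


def recover_private_key(n: int, e: int) -> int:
    """An attacker who can factor n recomputes d from the public key alone."""
    p = smallest_factor(n)
    q = n // p
    return pow(e, -1, (p - 1) * (q - 1))


(n, e), d = make_keys(61, 53, 17)
ciphertext = pow(65, e, n)  # encrypt the message 65
print(n, d, ciphertext)     # 3233 2753 2790
print(pow(ciphertext, recover_private_key(n, e), n))  # 65
```

Here `smallest_factor` succeeds instantly because n has only four digits; the security of real RSA rests entirely on that step being infeasible for numbers hundreds of digits long.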

4.2 THE QUANTUM COMPUTER OF D-WAVE

The new version of D-Wave’s quantum computer, due to enter service before the end of 2013, will help NASA in its search for extraterrestrial worlds and will be used mainly to improve services. The D-Wave Two, a 512-qubit computer, will soon be put into use in the new Quantum Artificial Intelligence Laboratory created by NASA, Google, and the USRA (Universities Space Research Association). Google’s director of engineering, Hartmut Neven, posted a note on the company’s blog describing the laboratory’s objectives. He believes that quantum computation can help solve some computer science problems, especially in artificial intelligence, which depends on building the best possible models of the world in order to make more accurate predictions.

To cure diseases, more effective disease models are needed; to develop more effective environmental policies, better global climate models are needed; and to build a more useful search service, one needs to better understand the questions asked and the content available on the Internet in order to give the best answer. According to the announcement, Neven said that the new laboratory will turn these theoretical ideas into practice. Installation of the D-Wave machine began at NASA’s Ames Research Center at Moffett Field, California, a few minutes from Google’s headquarters in Mountain View.

This partnership represents D-Wave’s latest breakthrough. The Canadian company D-Wave claims to have built and sold the world’s first commercial quantum computer. While many academic laboratories are working to build quantum computers with only a small number of qubits, the researchers at D-Wave are working to leave less doubt about their machine. A few years ago, many well-known quantum computing specialists expressed skepticism in IEEE Spectrum, but D-Wave has since won over some former critics. The company gives independent researchers access to its machines, and in at least two cases this openness has led to publications supporting its claims about quantum computation and performance. D-Wave gained greater credibility when it made its first commercial sale, to Lockheed Martin, in 2011.

According to the Google representative, the new Quantum Artificial Intelligence Lab submitted the D-Wave Two to rigorous testing before approving the machine. One test required the computer to solve certain optimization problems at least 10,000 times faster than a classical computer. In another, the D-Wave machine obtained the highest score on a standard SAT problem set. Google has used D-Wave hardware for years to solve machine learning problems. The company developed a compact, efficient machine learning algorithm for pattern recognition that is useful for power-limited devices such as smartphones and tablets. Another, similar algorithm proved well suited to handling “polluted” data, for example when a high percentage of the images in online albums is labeled incorrectly.

NASA, for its part, hopes the technology can help accelerate the search for distant planets outside the solar system and support mission control for future human or robotic space missions. Researchers at NASA and Google will not monopolize the laboratory’s new D-Wave Two: USRA intends to make the system available to the American academic research community, a decision that should help D-Wave win over the skeptics. Lockheed Martin was also in the news: at the beginning of 2013, the aerospace company announced the purchase of the D-Wave Two for US$1 billion, as an upgrade to its D-Wave One machine.

4.3 TEST: DIGITAL COMPUTER VS. QUANTUM COMPUTER

For the first time, a quantum computer was pitted against an ordinary PC, and the quantum computer won by a wide margin. The term quantum computation has always been associated with phrases such as “in the future”, “when they become reality”, and “if they can be built”. This perception began to change in 2007, when the Canadian company D-Wave presented a computer that, it claimed, performed calculations based on quantum mechanics. Skepticism among researchers and scholars in the field was high. However, in 2011 the scientific community gained access to the equipment and was able to confirm that D-Wave’s processor is really quantum. Now Catherine McGeoch of Amherst College in the United States has been hired by D-Wave to compare its machine, which uses “adiabatic quantum computation”, with an ordinary PC. According to the researcher, this is the first study to compare the two computing platforms directly: “This is not the last word, but rather a beginning in an attempt to find out what the quantum processor can or cannot do.”

The quantum processor, made up of 439 qubits formed by niobium loops, was 3,600 times faster than the ordinary PC at calculations involving a combinatorial optimization problem, which consists of minimizing the value of an expression by choosing the values of certain variables. Calculations of this kind are widely used in algorithms for image recognition, machine vision, and artificial intelligence. D-Wave’s quantum computer found the best solution in about half a second, while the most efficient classical computer needed half an hour to reach the same result.

The researcher acknowledges that it was not an entirely fair contest, since general-purpose computers usually do not fare well against processors dedicated to a specific type of problem. According to her, the next step will therefore be to pit the adiabatic quantum processor against a classical processor suited to this type of calculation, such as the GPU on a graphics card. She highlighted the specialized nature of the quantum computer, pointing out that it excels at this kind of calculation: this type of computer is not meant for surfing the internet, but it solves this specific yet important type of problem very quickly.
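The combinatorial optimization problem used in this test can be written as a QUBO (quadratic unconstrained binary optimization) objective, a form equivalent to the Ising model that D-Wave’s annealers are designed to minimize. The sketch below solves a hypothetical three-variable instance by exhaustive search; it is not the classical solver used in the comparison, and the function names are our own. It checks all 2^n assignments, which is exactly what becomes infeasible as n grows.

```python
from itertools import product


def energy(x, linear, quadratic):
    """QUBO objective: sum_i h_i x_i + sum_{i<j} J_ij x_i x_j with x_i in {0, 1}."""
    total = sum(h * x[i] for i, h in linear.items())
    total += sum(w * x[i] * x[j] for (i, j), w in quadratic.items())
    return total


def brute_force_minimum(n, linear, quadratic):
    """Check all 2**n assignments; the cost doubles with every added variable."""
    best = min(product((0, 1), repeat=n), key=lambda x: energy(x, linear, quadratic))
    return best, energy(best, linear, quadratic)


# Hypothetical three-variable instance: reward each variable, penalize pairs.
linear = {0: -1, 1: -2, 2: -1}
quadratic = {(0, 1): 2, (0, 2): 2, (1, 2): 2}
print(brute_force_minimum(3, linear, quadratic))  # ((0, 1, 0), -2)
```

With 439 binary variables, as in the processor described above, exhaustive search would face 2^439 assignments, so practical classical solvers rely on heuristics instead.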

4.4 TECHNOLOGIES

Given the possible applications of quantum information processing, how can they be run on real physical systems? At the scale of a few qubits, there are many working proposals for quantum information processing devices. Perhaps the easiest to realize are those based on optical techniques, that is, on electromagnetic radiation. Simple devices, such as mirrors and beam splitters, can be used to perform elementary manipulations of photons. However, researchers have found it very difficult to produce single photons one after another on demand, so they opted for schemes that produce individual photons “from time to time”, at random.
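A classic example of photon manipulation with mirrors and beam splitters is the Mach-Zehnder interferometer. The sketch below tracks a single photon’s amplitudes in the two paths; it assumes ideal, lossless components and one common beam-splitter convention, and the function names are hypothetical. With equal path lengths the photon always leaves through the same output, and an extra phase φ in one arm gives P(out0) = sin²(φ/2).

```python
import cmath
import math


def beam_splitter(amps):
    """Ideal 50/50 beam splitter acting on a photon's amplitudes in two paths.

    Uses the symmetric convention (1/sqrt2) * [[1, i], [i, 1]].
    """
    a0, a1 = amps
    s = 1 / math.sqrt(2)
    return (s * (a0 + 1j * a1), s * (1j * a0 + a1))


def phase_shift(amps, phi):
    """Extra optical path (phase phi) in path 0 only."""
    a0, a1 = amps
    return (cmath.exp(1j * phi) * a0, a1)


def mach_zehnder(phi):
    """Probability of detecting the photon at each output of the interferometer."""
    amps = (1 + 0j, 0j)         # single photon entering through path 0
    amps = beam_splitter(amps)  # first beam splitter
    amps = phase_shift(amps, phi)
    amps = beam_splitter(amps)  # recombine
    return tuple(abs(a) ** 2 for a in amps)


for phi in (0.0, math.pi / 2, math.pi):
    p0, p1 = mach_zehnder(phi)
    print(f"phi={phi:.2f}: P(out0)={p0:.3f}, P(out1)={p1:.3f}")
```

The photon behaves as if it had traveled both paths at once: the outcome depends on the phase difference between the arms, not on either arm alone.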

Experiments in quantum cryptography, superdense coding, and quantum teleportation have been performed using optical techniques. One advantage of these techniques is that photons tend to be very stable carriers of quantum information. One drawback is that photons do not interact directly with each other: the interaction must be mediated by another element, such as an atom, which introduces additional complications and noise into the experiment. An effective interaction between two photons arises as follows: the first photon interacts with the atom, which in turn interacts with the second photon, producing an overall interaction between the two photons.

An alternative scheme is based on methods for trapping atoms. In an ion trap, a small number of charged atoms are held in a confined space, while neutral-atom traps confine uncharged atoms. Quantum information processing schemes based on atom traps use the atoms to store qubits. Electromagnetic radiation also appears in these schemes, but differently from the “optical” approach to quantum information processing: here, photons are used to manipulate the information stored in the atoms. Single-qubit quantum gates can be performed by applying appropriate pulses of electromagnetic radiation to individual atoms.
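In these schemes, a single-qubit gate is implemented by a resonant pulse whose duration sets the rotation angle θ = Ωt, where Ω is the Rabi frequency. This sketch assumes an ideal two-level atom in the rotating frame and a hypothetical Rabi frequency of 2π × 1 MHz; the function name `resonant_pulse` is our own. A π pulse acts as a NOT gate, and a π/2 pulse creates an equal superposition.

```python
import math


def resonant_pulse(amps, rabi_frequency, duration):
    """Apply a resonant pulse to a two-level atom (rotating frame, ideal pulse).

    The pulse rotates the qubit by theta = rabi_frequency * duration:
    |0> -> cos(theta/2)|0> - i sin(theta/2)|1>, and likewise for |1>.
    """
    theta = rabi_frequency * duration
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    a0, a1 = amps
    return (c * a0 - 1j * s * a1, -1j * s * a0 + c * a1)


RABI = 2 * math.pi * 1e6  # hypothetical Rabi frequency: 2*pi x 1 MHz (rad/s)
ground = (1 + 0j, 0j)

flipped = resonant_pulse(ground, RABI, math.pi / RABI)        # "pi pulse"
halfway = resonant_pulse(ground, RABI, math.pi / (2 * RABI))  # "pi/2 pulse"
print([round(abs(a) ** 2, 6) for a in flipped])  # [0.0, 1.0]: acts as a NOT gate
print([round(abs(a) ** 2, 6) for a in halfway])  # [0.5, 0.5]: equal superposition
```

Under these assumptions, a π pulse lasts 0.5 µs; errors in pulse length or frequency translate directly into gate errors, which is one reason precise laser and microwave control is central to trapped-atom experiments.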

Neighboring atoms can interact with each other through, for example, dipolar forces, which allow two-qubit quantum gates to be executed. Moreover, the exact nature of the interaction between neighboring atoms can be modified by applying appropriate electromagnetic pulses to the atoms, which lets the experimenter determine which gates are executed in the system. Finally, quantum measurements can be performed on these systems using the quantum jump technique, which implements measurements in the computational basis used in quantum computation with high accuracy. Another class of quantum information processing systems is based on nuclear magnetic resonance (NMR). These schemes store quantum information in the nuclear spins of atoms in molecules and manipulate this information with electromagnetic radiation.
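To make the link between an interaction and a gate concrete, here is a sketch assuming an idealized Ising-type coupling proportional to Z⊗Z (real dipolar couplings contain additional terms). Letting the coupling act for a fixed time, then adding single-qubit Z rotations, yields a controlled-Z gate up to a global phase. Everything is diagonal in the computational basis, so only the four diagonal phases are tracked; the function names are hypothetical.

```python
import cmath
import math

BASIS = ["00", "01", "10", "11"]

def z(bit: str) -> int:
    """Eigenvalue of Pauli Z on a computational-basis bit."""
    return 1 if bit == "0" else -1

def coupling_phases(theta: float):
    """Diagonal of exp(-i * theta * Z⊗Z): the coupling acting for a fixed time."""
    return [cmath.exp(-1j * theta * z(b[0]) * z(b[1])) for b in BASIS]

def local_z_phases(theta: float):
    """Diagonal of exp(+i * theta * Z) applied to each qubit separately."""
    return [cmath.exp(1j * theta * (z(b[0]) + z(b[1]))) for b in BASIS]

theta = math.pi / 4
gate = [c * l for c, l in zip(coupling_phases(theta), local_z_phases(theta))]
cz = [g / gate[0] for g in gate]     # divide out the unobservable global phase
print([round(v.real) for v in cz])   # [1, 1, 1, -1]: a controlled-Z gate
```

Combined with single-qubit gates, a controlled-Z is enough to build a CNOT, which is why a tunable neighbor interaction suffices for universal computation.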

Quantum information processing by NMR faces three particular difficulties that set this technology apart from other quantum information processing systems. First, the molecules are prepared by letting them reach equilibrium at room temperature, where the thermal energy is so much higher than the spin-flip energies that the spins become almost completely randomly oriented. This makes the initial state much “noisier” than is desirable for quantum information processing. A second problem is that the class of measurements available in NMR does not include all the measurements needed for quantum information processing. However, in many cases the class of measurements allowed in NMR is sufficient.
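How random is “almost completely randomly oriented”? For a spin-1/2 ensemble in thermal equilibrium, the polarization is tanh(hf / 2kT). The sketch below assumes a 500 MHz spin transition (an illustrative value, not one taken from the experiments described here) at 300 K.

```python
import math

H_PLANCK = 6.62607015e-34   # J*s (exact SI value)
K_BOLTZMANN = 1.380649e-23  # J/K (exact SI value)

def spin_polarization(frequency_hz: float, temperature_k: float) -> float:
    """Thermal-equilibrium polarization of spin-1/2 nuclei: tanh(h*f / (2*k*T))."""
    return math.tanh(H_PLANCK * frequency_hz / (2 * K_BOLTZMANN * temperature_k))

# Assumed example: a 500 MHz spin transition at room temperature (300 K).
p = spin_polarization(500e6, 300.0)
print(f"{p:.1e}")   # 4.0e-05
```

A polarization of about 4×10⁻⁵ means the population difference between the two spin states is only about 4 spins in every 100,000; the rest form a random background, which is why the NMR initial state is so noisy.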

Third, since molecules cannot be addressed individually in NMR, it is natural to ask how individual qubits can be manipulated appropriately. However, different nuclei in the molecule may have distinct properties that allow them to be addressed individually, or at least to be manipulated at a sufficiently fine-grained level to enable the essential operations of quantum computation. The IBM Almaden Research Center has produced excellent results: a seven-qubit NMR quantum computer was built and correctly executed Shor's algorithm, factoring the number 15. This computer used the nuclear spins of five fluorine atoms and two carbon atoms.
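Shor's algorithm reduces factoring to finding the order r of a number a modulo N; only that order-finding step needs a quantum computer. The sketch below replaces the quantum step with brute force so that the classical reduction around it can be followed for N = 15. The choice a = 7 and the function names are assumptions for illustration.

```python
from math import gcd

def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (brute force stands in for the quantum step)."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical(n: int, a: int):
    """Return the two gcds that split n using base a, or None if this a fails."""
    g = gcd(a, n)
    if g != 1:
        return tuple(sorted((g, n // g)))   # lucky guess: a already shares a factor
    r = find_order(a, n)
    if r % 2 == 1:
        return None                         # odd order: try another a
    x = pow(a, r // 2, n)
    if x == n - 1:
        return None                         # a^(r/2) = -1 mod n: try another a
    return tuple(sorted((gcd(x - 1, n), gcd(x + 1, n))))

print(find_order(7, 15))      # 4
print(shor_classical(15, 7))  # (3, 5)
```

Here 7⁴ ≡ 1 (mod 15), so x = 7² mod 15 = 4, and gcd(3, 15) = 3 and gcd(5, 15) = 5 are the factors.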

4.5 PROBLEMS

The main difficulty in building quantum computers is the high incidence of errors. One source of errors is decoherence: the influence of the environment on the quantum computer can alter the state of the qubits. Such errors can invalidate the entire calculation and leave the system in an inconsistent state. Another difficulty stems from the very nature of quantum mechanics, the principle that makes quantum computers so interesting: measuring or observing a quantum system can destroy the superposition of states. This means that if a program reads data while running on a quantum computer, all of the processing can be lost.
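The effect of “reading data” mid-computation can be sketched with a single qubit. Applying two Hadamard gates to |0> always returns |0>, because the two branches of the superposition interfere. Measuring between the gates collapses the superposition, and the final result becomes a coin toss. The gate sequence and names are illustrative assumptions, not taken from the article.

```python
import math
import random

S = 1 / math.sqrt(2)
HADAMARD = [[S, S], [S, -S]]

def apply(gate, state):
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def measure(state, rng):
    """Sample an outcome with the Born rule and return it with the collapsed state."""
    p1 = abs(state[1]) ** 2
    outcome = 1 if rng.random() < p1 else 0
    return outcome, ([0.0, 1.0] if outcome else [1.0, 0.0])

def run(peek: bool, rng) -> int:
    state = [1.0, 0.0]                  # qubit starts in |0>
    state = apply(HADAMARD, state)      # equal superposition of |0> and |1>
    if peek:
        _, state = measure(state, rng)  # reading the qubit mid-run collapses the superposition
    state = apply(HADAMARD, state)      # second Hadamard makes the two branches interfere
    outcome, _ = measure(state, rng)
    return outcome

rng = random.Random(0)
undisturbed = [run(False, rng) for _ in range(1000)]
disturbed = [run(True, rng) for _ in range(1000)]
print(sum(undisturbed))  # 0: without the peek the result is always |0>
print(sum(disturbed))    # roughly half of the 1000 runs now end in |1>
```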

The biggest difficulty is correcting errors without actually measuring the system. This has been addressed by preserving phase coherence: the technique can fix errors without damaging the system's state. To do this, nuclear magnetic resonance was used to spread a single qubit of quantum information across the three nuclear spins of a trichloroethylene molecule. Essentially, this technique uses indirect observations to detect errors while maintaining the coherence of the system. Given all these difficulties, the importance of IBM's experiment is clear: scientists were able to overcome these setbacks and put Shor's algorithm into practice on a quantum computer.
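The idea of correcting an error without reading the data can be sketched with the textbook three-qubit bit-flip code. This is assumed here for illustration and is not necessarily the exact code run in the trichloroethylene experiment. One logical qubit α|0> + β|1> is stored as α|000> + β|111>. The parity checks Z₀Z₁ and Z₁Z₂ give the same answer for every branch of the superposition, so they locate a flipped qubit without revealing α or β. States are represented as a hypothetical dict from basis strings to amplitudes.

```python
def encode(alpha: float, beta: float) -> dict:
    """Spread one logical qubit over three physical qubits."""
    return {"000": alpha, "111": beta}

def flip(state: dict, k: int) -> dict:
    """Apply a bit-flip (X) error or correction to qubit k."""
    out = {}
    for bits, amp in state.items():
        b = list(bits)
        b[k] = "1" if b[k] == "0" else "0"
        out["".join(b)] = amp
    return out

def syndrome(state: dict) -> tuple:
    """Parities of qubits (0,1) and (1,2); identical for every branch of the superposition."""
    parities = {(int(b[0]) ^ int(b[1]), int(b[1]) ^ int(b[2])) for b in state}
    assert len(parities) == 1   # reading them reveals nothing about alpha or beta
    return parities.pop()

WHICH_QUBIT = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state: dict) -> dict:
    k = WHICH_QUBIT[syndrome(state)]
    return state if k is None else flip(state, k)

logical = encode(0.6, 0.8)
for k in range(3):
    damaged = flip(logical, k)
    print(k, syndrome(damaged), correct(damaged) == logical)
```

Each single bit flip produces a distinct syndrome, and undoing the indicated flip restores the original amplitudes exactly.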

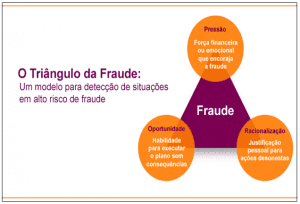

5. BLOCKCHAIN

The blockchain, or “chain of blocks”, is a kind of decentralized database, maintained by dedicated software, whose main function is to verify the authenticity of bitcoins and their transactions. Every transaction made with bitcoins is recorded in it, so that the integrity of the currency can be verified and counterfeiting prevented. Basically, when a transaction is performed, the information it generates is added to the blockchain as a record. When a transaction needs to be verified, the nodes responsible for verification scan the recorded transactions involving the bitcoins in question, which prevents not only counterfeiting but also “double spending” of the same coins.
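The integrity property described above comes from chaining hashes: each block stores the hash of the previous block, so altering any recorded transaction breaks every later link. The sketch below is a deliberately simplified, hypothetical ledger using SHA-256 over JSON; it is not Bitcoin's actual block format.

```python
import hashlib
import json

def block_hash(block: dict) -> str:
    """SHA-256 of a canonical JSON encoding, so equal content always hashes equally."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()

def append_block(chain: list, transactions: list) -> None:
    """Add a block that commits to the hash of the block before it."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"prev_hash": prev, "transactions": transactions})

def is_valid(chain: list) -> bool:
    """Every block must reference the current hash of its predecessor."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain)))

chain = []
append_block(chain, [{"from": "alice", "to": "bob", "amount": 5}])
append_block(chain, [{"from": "bob", "to": "carol", "amount": 2}])
append_block(chain, [{"from": "carol", "to": "dave", "amount": 1}])
print(is_valid(chain))                       # True
chain[0]["transactions"][0]["amount"] = 500  # tamper with recorded history
print(is_valid(chain))                       # False
```

Tampering with the first block changes its hash, so the second block's `prev_hash` no longer matches and the forgery is detected.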

A timestamp server proves that a given piece of information existed at a given time, so that the operations that later depend on it can be shown to be authentic. The problem in Bitcoin is that there is no central server that can perform these checks and assure users of the integrity of the information; to make this verification possible, the proof-of-work technique was adopted. Proof of work requires a certain amount of computation before a piece of information is accepted as valid, and depending on the required difficulty, this can take a long processing time.

Bitcoin uses this technique, based on the Hashcash system, to validate the creation of new blocks. For a block to be accepted, the node that found its proof of work broadcasts it to the network, where other nodes verify and accept it, at which point it becomes a valid block.
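A Hashcash-style proof of work can be sketched as a search for a nonce that makes the hash of the block data start with a required number of zero bits. Finding the nonce takes many attempts, but checking it takes a single hash. This is a simplification: Bitcoin itself hashes an 80-byte header with double SHA-256 and compares the result against a numeric target. The header bytes and the 16-bit difficulty here are hypothetical.

```python
import hashlib

def leading_zero_bits(digest: bytes) -> int:
    """Number of leading zero bits in a digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()

def mine(header: bytes, difficulty_bits: int) -> int:
    """Try nonces in order until the hash has enough leading zero bits (slow)."""
    nonce = 0
    while True:
        digest = hashlib.sha256(header + nonce.to_bytes(8, "big")).digest()
        if leading_zero_bits(digest) >= difficulty_bits:
            return nonce
        nonce += 1

def verify(header: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Check a claimed proof of work with a single hash (fast)."""
    digest = hashlib.sha256(header + nonce.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= difficulty_bits

header = b"prev_hash|transactions"   # stand-in for real block data
nonce = mine(header, 16)             # about 2**16 = 65,536 attempts expected
print(nonce, verify(header, nonce, 16))
```

Each extra bit of difficulty doubles the expected mining work while verification stays at one hash, which is what lets other nodes cheaply accept or reject a new block.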

6. TYPES OF PROCESSORS

Because of defects known as nitrogen-vacancy centers, diamond has attracted several teams of scientists building quantum processors. Now, an international research team has shown that it is not only possible to build a quantum computer in diamond but also to protect it from decoherence. In this context, decoherence is a kind of noise or interference that disturbs the delicate relationship between the qubits. When it sets in, a particle that was at point A and point B at the same time suddenly ends up only at point A or only at point B. Meanwhile, another team created a solid-state quantum processor using semiconductor materials. Whereas gas- and liquid-based systems account for the vast majority of quantum computing experiments today, solid-state quantum processors have the advantage of being able to scale to larger numbers of qubits without substantial complications.

The diamond quantum processor is as simple as possible: it has two qubits. Although diamond is made of carbon, all diamonds contain impurities, that is, foreign atoms “lost” in their crystal structure. It is these impurities that have aroused the interest of scientists working on quantum computation. The first qubit is a nitrogen nucleus, while the second is a single “wandering” electron arising from another defect in the diamond's structure; in fact, each bit is the spin of one of these particles. Electrons work better as bits than nuclei because they can perform calculations more quickly. On the other hand, they are the most frequent victims of decoherence.

6.1 APPLICATIONS

Given the processing power of such processors, they would be very useful for scientific research, so they will naturally be deployed first in supercomputers, followed by commercial applications in virtual reality and artificial intelligence, which are set to become a trend of this century. Games could contain characters that genuinely interact with the player, talking and acting based on the player's actions, like an almost real-time RPG. A single quantum computer could control hundreds of these characters in real time, and sound and gesture recognition would become trivial. There is no doubt that there has been significant progress in various fields related to artificial intelligence.

As research advances, quantum computers could provide secure encryption, run intelligent algorithms for searching large databases, carry out artificial intelligence tasks almost instantly, and transfer data quickly. Fiber optics carrying terabytes per second, together with quantum-mechanical routers, could handle this information. That would be enough to turn the Internet into a virtual world in which people interact through avatars using speech, gestures and even touch, as in the real world. This is where the ongoing discussions point. The most important question is when. No one really knows how fast this research will progress: it may take a hundred years to see such applications running, or only twenty or thirty.

6.2 HOW THEY WORK

The first quantum computers have already become a reality: IBM, for example, presented its first quantum chip at the 12th edition of a conference held in Palo Alto. It is still a very basic design, with only five qubits and a clock rate of only 215 Hz, and it requires a great deal of equipment to operate; however, it showed that a quantum processor is possible today. The first problem in this experiment is how to keep the molecule stable. The solution currently in use is to keep it in a cooling system at temperatures close to absolute zero. However, this system is very expensive. To be commercially viable, quantum processors must overcome this limitation and operate at room temperature.

The second problem is how to manipulate the atoms that make up the processor. An atom can change state extremely quickly, but it cannot “guess” which state it should take; to manipulate atoms, smaller particles must be used. The solution found by IBM's designers is to use radiation in a system similar to magnetic resonance, but more precise. This system has two problems. First, it is very expensive: a single device costs no less than US$5 million. Second, the technique is very slow, which is why the IBM prototype runs at only 215 Hz, millions of times slower than any current processor, which already operates in the gigahertz range. Another obstacle that quantum computing must overcome is commercial viability.
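The size of that speed gap can be checked with a quick ratio, assuming 1 GHz as the lower end of the gigahertz range (a faster classical clock only widens the gap):

```latex
\[
\frac{f_{\text{classical}}}{f_{\text{IBM prototype}}}
  = \frac{1\ \text{GHz}}{215\ \text{Hz}}
  = \frac{10^{9}}{215}
  \approx 4.7 \times 10^{6}
\]
```

At 3 GHz the ratio grows to roughly 1.4 × 10⁷, so “millions of times slower” holds across the gigahertz range.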

6.3 NEW HOPES

As noted, the experimental quantum processors developed so far are slow, as is normal for emerging technologies, and they require complex and costly equipment. They are not as easy to package as Intel or AMD processors, which work at room temperature with a simple cooler. Current quantum prototypes use NMR equipment to manipulate the state of atoms and molecules, which must be kept at temperatures close to absolute zero to remain stable. Although such systems help scientists study quantum mechanics, they will never be economically viable. Today, the development of quantum processors is gaining momentum. The first experiments showed that manipulating electrons is very problematic because electrons, owing to their small mass and exposure, are very sensitive to any external influence.

Researchers then turned to manipulating the nucleus, which greatly simplifies the process, because the nucleus is heavier and is relatively isolated from the external environment by the barrier of electrons around it. But that is only part of the solution: a technique to manipulate the nucleus is still needed. The first group used magnetic resonance, a very expensive technique, but others are already developing easier ways to do this. Scientists at Los Alamos National Laboratory in the United States have published experiments that use optics to manipulate photons. The idea behind this new technology is that photons can be used as waves (which interact with the atoms that make up the quantum system) and can be transported through an optical system.

With this new technology, photons are used to manipulate the atoms that make up the quantum processor. Because it is a particle, a photon can be “fired” at a qubit, altering its state on impact. Similarly, photons can be emitted so that they bounce off the qubits, which alters the photon's trajectory. The key point is that the photon can then be recovered using a photodetector that detects photons as waves rather than as particles. By calculating the photon's path, the data recorded in the qubits can be retrieved.

One problem encountered during the experiment was the large number of errors in the system. To address it, researchers are working on error-correcting algorithms that will make the system reliable. With all this work, quantum computers may become viable sooner than expected. Fifteen years ago, quantum computers were considered science fiction; today, some prototypes are already in operation. The question now is when these systems will become viable, and the progress we are seeing may provide the answer.

CONCLUSION

For most mathematical calculations, text editing or web browsing, the best solution is to use a conventional computer (based on the von Neumann architecture). In fact, today's processors are very effective at these tasks. However, in areas such as artificial intelligence, other types of computers and architectures are needed. For example, in image recognition or speech processing algorithms, the sequential execution and storage model of the von Neumann architecture (very effective for other applications) becomes a bottleneck that limits the performance of these systems.

For this type of application, it is more useful to have a computer with enough processing capacity to perform pattern matching (the common principle behind solving these problems). Among the different alternatives, quantum computers are the most promising, precisely because quantum computation differs from the von Neumann architecture by offering enormous parallel processing power.

Therefore, it can be concluded that quantum computers are not meant to replace classical computers on the problems the latter already solve efficiently. Instead, quantum computation will be applied to problems for which no efficient solution has been found, such as in artificial intelligence and cryptography, and could solve extremely complex problems that are intractable for classical computing. However, the difficulty of controlling these phenomena and, above all, of implementing a scalable architecture leaves much uncertainty about the success of these machines. Large companies such as IBM are investing in research in this area and have already built the first prototypes. Algorithms for this new paradigm are already being developed, as are programming languages.

It remains to be seen whether these problems can be solved: whether it is a matter of investment and time, or whether there is a physical limit that prevents building a machine that surpasses current ones. If it is possible, a quantum coprocessor working alongside the silicon processor may form the computer of the future, one able to predict the weather more accurately and to refine complex calculations.


[1] Bachelor of Business Administration.

Submitted: May, 2019.

Approved: June, 2019.