ORIGINAL ARTICLE

BONATO, Isabelle Emy [1], GRANADO, Karina [2]

BONATO, Isabelle Emy. GRANADO, Karina. The responsibility of electoral justice in the face of electoral advertisements on digital platforms. Revista Científica Multidisciplinar Núcleo do Conhecimento. Year 05, Ed. 03, Vol. 02, pp. 85-120. March 2020. ISSN: 2448-0959, Access link: https://www.nucleodoconhecimento.com.br/law/election-advertisements

ABSTRACT

The study questions the reliability of digital social media platforms for election advertising and examines the responsibility of the Electoral Justice in this context. It analyzes whether the Electoral Justice needs to regulate the use of these platforms and asks whether using social networks as a platform for electoral propaganda harms democracy. Media outlets around the world have widely reported that there are digital means of obtaining personal information and of directing information, true or not, to specific audiences. The use of social media as an electoral advertising platform may therefore be unsafe and easily manipulated. For this reason, the present study analyzes whether the Superior Electoral Court should regulate its use in light of the Principle of Electoral Integrity (lisura), which guarantees the free formation of the voter’s will and equality between candidates, thereby safeguarding Brazilian democracy.

Keywords: Democracy, social media, elections.

1. INTRODUCTION

Recently, much has been said about the importance of democracy. Some speak of “coups” and take to the streets in its defense; others believe there is nothing to fear, since the democratic process remained intact through the 2018 presidential elections. However, a new issue has been raised: the reliability of digital platforms for electoral advertising, whose use the TSE (Superior Electoral Court) authorized for these elections. On an issue as important as democracy, it is essential to understand how social networks function and what methods exist for manipulating data and directing information, in order to reflect on freedom of voting and on the defense and protection of democracy.

In March 2018, The New York Times reported one of Facebook’s scandals. Together with The Observer of London, the newspaper investigated a political consulting firm called Cambridge Analytica and revealed a potential new weapon used by the firm: software capable of mapping personality traits based on what people like on Facebook. Cambridge Analytica had access to the personal data of millions of users of the network and could predict, among other personal characteristics, each user’s receptiveness, political views, IQ, personal satisfaction, and whether the person was suspicious or open. The company worked on Donald Trump’s 2016 election campaign, and the case raised the issue of the security of personal data on social networks (ROSENBERG; CONFESSORE; CADWALLADR, 2018). Another report discusses the existence of bots[3] in the cyber world, capable of directing specific information to specific groups and thereby influencing an election (PATTERSON, 2018). In light of this information, there is growing concern about the integrity of elections around the world. In Brazil, for example, there was a scandal over a “content boosting service” allegedly used by then presidential candidate Jair Bolsonaro to disseminate Fake News (PERON; MARTINS, 2018), another subject explored in this work.

A principle inherent to the Democratic State of Law is the Principle of Electoral Integrity (lisura), defined by Ramayana (2015, p.31) as follows: “The guarantee of the integrity of elections nourishes a special sense of protection of the fundamental rights of citizenship (citizen-voter), and finds a legal-constitutional foundation in Articles 1, item II, and 14, §9, of the Fundamental Law.” This constitutional principle aims to ensure that the people participate freely and truthfully in choosing their representatives. The Electoral Justice is responsible for enabling the expression of the voters’ will, conducting all electoral procedures so that they unfold harmoniously and transparently, without compromising popular sovereignty (VELLOSO; AGRA, 2016). It is therefore extremely important to examine the means of data manipulation present in current digital platforms in order to reflect on the responsibility of the Electoral Justice regarding the use of social networks for political propaganda. To this end, the study examines recent articles that discuss various forms of data manipulation, their effects on elections, and how other countries are approaching the subject, in order to reflect on the reliability of digital platforms for political advertising.

The work starts from a review of scientific articles and materials such as books and reports that document the manipulation of personal data in the virtual world, in order to show that there are mechanisms capable of directing specific information to a target audience with the potential to influence election results, thus violating the fundamental constitutional guarantee of free choice in voting. Based on basic premises such as democracy, the constitutional principles of Electoral Law and the functions of the Brazilian Electoral Justice, the study analyzes the new wording of art. 57-C of Law No. 9,504/1997 (as amended by Law No. 13,488/2017), which allowed the use of digital platforms for election advertising in the last elections, enabling reflection on the responsibility of the Electoral Justice regarding the use of digital platforms and the consequences for election campaigns and Brazilian democracy.

2. DIGITAL PLATFORMS AND THEIR DANGERS

Digital platforms emerged with the promise of bringing people together and promoting more “egalitarian ways to meet and discuss [any subject].” Online media has profoundly changed the way people communicate, debate and form opinions. Digital platforms are organized through complex cascading processes: news spreads “like wildfire on social networks” through direct connections between readers and news producers, making it increasingly difficult to distinguish between the two. This phenomenon has changed the way society composes its narratives about the common world (TÖRNBERG, 2018, p.1).

Peter Törnberg, from the department of sociology at the University of Amsterdam, explains:

Despite the initial optimism about this ostensibly decentralized and democratic meeting place, the online world seems less and less like a common table that brings us together to discuss and freely identify the problems of society in a new type of public sphere. Instead, it seems to bring out the worst of human instincts: we group ourselves into tribes that comfort us with reaffirmation and protect us from disagreement; these are echo chambers[4] that reinforce the existing perspective and foster confirmation bias. The technology that was made to help further weave the bonds between human beings seems to have led to a wear and tear of our social fabric as we are divided into social groups with separate worldviews. (TÖRNBERG, 2018, p.1).

The internet emerged as a source of easily accessible knowledge, but over time the quality and credibility of information have declined drastically. Increasingly, there is “an information climate characterized by prejudiced narratives, false news, conspiracy theories, mistrust and paranoia” (TÖRNBERG, 2018, p.2). The digital world has become fertile ground for misinformation, and studies show that fake news spreads faster and farther than true news on social networks. According to Törnberg, this phenomenon can affect relevant aspects of citizens’ lives in every area. Misinformation can therefore even compromise the outcome of an election, posing a risk not only to human society, as the author states, but also to a country’s democracy.

There appears to be a link between echo chambers and the spread of misinformation, since these homogeneous groups of users, with their preference for self-confirmation, seem to provide breeding grounds in which rumors and misinformation spread. In a polarized digital space, users tend to promote their favorite narratives, forming polarized groups and resisting information that does not conform to their beliefs (TÖRNBERG, 2018, p.2). This produces armies of supporters of certain products or brands, and even of presidents (or presidential candidates), which can be mobilized quickly. Echo chambers may thus make fake news more viral, since information that resonates with biased user groups is more likely to spread across a network (TÖRNBERG, 2018, p.3).

Törnberg’s study concludes that the mere grouping of users who share the same worldview is sufficient to affect the virality of information. This suggests that it is not only the algorithms filtering the news that shape its spread: the fact that social media enables these groupings also changes the dynamics of virality. This is part of the larger question of how social media has affected political and social processes (TÖRNBERG, 2018, p.16-17).
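The mechanism Törnberg describes can be illustrated with a deliberately simplified threshold model of “complex contagion”, in which a user shares a story only after several of their contacts have shared it. This sketch is not Törnberg’s own model: the ring-shaped network, the threshold of two sharing neighbours, and the choice of initial sharers are assumptions made only to show that the same story travels much further when its first sharers belong to the same tightly knit group.

```python
def ring_lattice(n, k):
    """Hypothetical network: each user i is linked to the k nearest users on each side of a ring."""
    return {i: {(i + d) % n for d in range(-k, k + 1) if d != 0} for i in range(n)}


def cascade(graph, seeds, threshold):
    """Synchronous threshold contagion: a user shares once at least `threshold`
    of their contacts have shared. Returns the final set of sharers."""
    shared = set(seeds)
    while True:
        new = {
            user
            for user, contacts in graph.items()
            if user not in shared and len(contacts & shared) >= threshold
        }
        if not new:
            return shared
        shared |= new


graph = ring_lattice(12, 2)
clustered = cascade(graph, {0, 1}, threshold=2)  # first sharers in the same tight group
scattered = cascade(graph, {0, 6}, threshold=2)  # first sharers far apart
print(len(clustered), len(scattered))  # 12 2
```

With the same network and the same threshold, two initial sharers in one cluster reach all 12 users, while two isolated sharers reach no one else. Only the grouping differs, which mirrors the intuition that grouping alone can change virality.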

2.1 ARTIFICIAL INTELLIGENCE ON DIGITAL PLATFORMS

There are many uses of artificial intelligence; two are most relevant to this work, which seeks to analyze the reliability of platforms: software that identifies the user’s profile, and bots that interact in the virtual world.

2.1.1 IDENTIFYING THE USER’S PROFILE

In the age of algorithms, Facebook and Google make selections for people, whether of products, trips or presidential candidates. Algorithms know a great deal about their users, and with the advance of artificial intelligence they tend to improve immensely. They keep learning about each person and will soon be able, if they are not already, to know what each person wants, or will want, better than the person does. They will even know people’s emotions and be able to imitate human emotions on their own (SUNSTEIN, 2017, p. 13). Today, an algorithm that learns a little about a person can infer what people like them tend to like. Cass Sunstein, professor at Harvard Law School, explains:

Actually, this is happening every day. If the algorithm knows that you like certain types of music, it can know, with high probability, what types of movies and books you like and which political candidates will please you. And if it knows which websites you visit, it can know what products you’re likely to buy and what you think about climate change and immigration. A small example: Facebook probably knows your political convictions and can inform others, including candidates for public office. It categorizes its users as very conservative, conservative, moderate, liberal and very liberal (SUNSTEIN, 2017, p. 13).

Sunstein explains that Facebook categorizes its users politically by looking at the pages they like, and states that it is easy to build a political profile when a user likes some opinions but not others. If the user mentions certain candidates favorably or unfavorably, categorization is even easier. And the most alarming thing is that Facebook doesn’t hide what it is doing: “On the Ad Preferences page on Facebook, you can search for ‘Interests’, then ‘More’, ‘Lifestyle and Culture,’ and finally ‘U.S. Politics’[5] and categorization will appear” (SUNSTEIN, 2017, p. 13). Machine learning can be used to draw finer distinctions, the professor says, such as users’ opinions on public safety, the environment, immigration and equality. He adds: “To put it mildly, this information can be useful to others – campaign managers, advertisers, fundraisers and liars, including political extremists” (SUNSTEIN, 2017, p. 13).
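Facebook’s actual classification method is not public, so the following is only a toy sketch of the idea Sunstein describes: inferring one of the five political labels from the pages a user likes. The page names and their lean scores are hypothetical, invented for illustration.

```python
# Hypothetical lean scores: -2 (very liberal) ... +2 (very conservative).
# These page names and scores are invented for illustration only.
PAGE_LEAN = {
    "page_a": -2.0,
    "page_b": -1.0,
    "page_c": 0.0,
    "page_d": 1.0,
    "page_e": 2.0,
}

# The five categories Sunstein reports, ordered from -2 to +2.
LABELS = ["very liberal", "liberal", "moderate", "conservative", "very conservative"]


def categorize(liked_pages):
    """Average the lean of the scored pages a user likes and map it to a label.

    Returns None when the user likes none of the scored pages.
    """
    scores = [PAGE_LEAN[page] for page in liked_pages if page in PAGE_LEAN]
    if not scores:
        return None
    mean = sum(scores) / len(scores)
    # Round to the nearest integer in [-2, 2], then shift it to a list index 0..4.
    index = max(-2, min(2, round(mean))) + 2
    return LABELS[index]


print(categorize(["page_a", "page_b", "page_a"]))  # very liberal
```

Averaging is the simplest possible aggregation; a more realistic sketch might also weight stronger signals, such as the explicit mentions of candidates that, according to Sunstein, make categorization even easier. Note that Python’s `round()` sends exact halves to the nearest even integer, which only matters for ties.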

Hashtags should also be considered: #elenão, #partiu, #tbt, #lulalivre, #foratemer, #elesim, #EuApoioaLavaJato. Through them, users can find a large number of items that interest them or that fit (and even strengthen) their convictions. “The idea of the hashtag is to allow people to find tweets and information that interests them. It is a simple and fast classification mechanism” (SUNSTEIN, 2017, p. 14). So-called ‘hashtag entrepreneurs’ are very active: they create or disseminate hashtags as a way to promote ideas, perspectives, products, people, or supposed facts. This increases convenience, learning, and entertainment; after all, almost no one wants to see ads for products that don’t interest them. However, this architecture of control has a serious disadvantage, raising fundamental questions about freedom and democracy (SUNSTEIN, 2017, p. 14). The professor therefore asks what the social preconditions are for individual freedom and for a democratic system to work well:

Could mere chance be important, even if users don’t want it? Could a perfectly controlled communications universe – a custom feed – be a kind of dystopia itself? How can social media, the explosion of communication options, machine learning and artificial intelligence change citizens’ ability to govern themselves? (SUNSTEIN, 2017, p. 14).

Sunstein concludes that these issues are closely related and advocates a digital architecture of serendipity. Chance would limit the use of users’ personal data by artificial intelligence to tailor their online experience; without this marketing-oriented information, there would be no personal data to exploit for political manipulation. This measure would be “for the benefit of individual lives, group behaviors and democracy itself” (SUNSTEIN, 2017, p. 16). To the extent that providers can create something like custom experiences or closed communities for each user, built around favorite topics and preferred groups, caution is needed. “Self-insulation and personalization are solutions to some genuine problems, but they also disseminate falsehoods and promote polarization and fragmentation” (SUNSTEIN, 2017, p. 17). This is an important point when analyzing the nature of freedom, both personal and political, and the kind of digital communication that best serves a democratic order.

2.1.2 INTERACTING WITH THE USER

Another study, conducted at the Center for Information and Communication Technology of the Bruno Kessler Foundation in Italy, analyzed, “on a microscopic level, how social interactions can lead to emerging global phenomena such as social segregation, dissemination of information and behavior” (STELLA; FERRARA; DOMENICO, 2018, p.1). The authors state that digital systems are populated not only by humans but also by bots: software-controlled agents built for specific purposes, ranging from sending automated messages to adopting specific social or antisocial behaviors. Bots can therefore affect the structure and function of a social system (STELLA; FERRARA; DOMENICO, 2018, p.1).

But how can bots interact with users? Amaro Grassi, PhD, professor at FGV, explains:

Robots are used on social networks to propagate false, malicious information, or generate an artificial debate. To do this, they need to have as many followers as possible (GRASSI et al., 2017, p.12) […]. In this way, robots add a large number of people at the same time and follow real pages of famous people, in addition to following and being followed by a large number of robots, so that they end up creating mixed communities – which include real and fake profiles (FERRARA et al., 2016 apud GRASSI et al., 2017, p.12).

Some bots have mechanisms to mimic human behavior so that both users and other bots take them for humans. Others only aim to divert attention to a given theme (GRASSI et al., 2017, p.12). With machine learning, a system can distinguish humans from robots through profile behavior, for example by taking into account “that real users spend more time on the network exchanging messages and visiting the content of other users, such as photos and videos, while robot accounts spend time researching profiles and sending friend requests” (GRASSI et al., 2017, p.14).
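Grassi et al. do not specify the learning method at this point, so the sketch below swaps in one of the simplest supervised techniques, a nearest-centroid classifier, applied to the behavioral signals the quotation mentions. The four features (share of session time spent messaging, viewing content, searching profiles and sending friend requests) and the labeled training data are hypothetical.

```python
import math

# Feature order (fractions of session time): messaging, viewing content,
# searching profiles, sending friend requests. Assumed features, not FGV's.
FEATURES = ("messaging", "viewing", "profile_search", "friend_requests")


def centroid(rows):
    """Component-wise mean of a list of equal-length feature vectors."""
    n = len(rows)
    return [sum(column) / n for column in zip(*rows)]


def train(labeled):
    """labeled: dict mapping a label to its feature vectors. Returns label -> centroid."""
    return {label: centroid(rows) for label, rows in labeled.items()}


def classify(model, x):
    """Assign x to the label whose centroid is nearest in Euclidean distance."""
    return min(model, key=lambda label: math.dist(model[label], x))


# Invented training data for illustration only.
training = {
    "human": [[0.5, 0.4, 0.05, 0.05], [0.6, 0.3, 0.05, 0.05]],
    "bot": [[0.05, 0.05, 0.5, 0.4], [0.1, 0.0, 0.4, 0.5]],
}
model = train(training)

print(classify(model, [0.45, 0.45, 0.05, 0.05]))  # human
print(classify(model, [0.0, 0.1, 0.45, 0.45]))    # bot
```

A nearest-centroid rule was chosen only because it is short and transparent; a production detector would need far more features and real labeled data, and could be evaded by bots that imitate human time allocation, which is exactly the mimicry described above.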

Knowing each user’s profile and knowing how to interact with them, bots can be used to influence thoughts, actions and even initiate social and political movements. Thus it is important to understand the role of artificial intelligence in political affairs, and consequently in the democratic electoral process.

2.2 FAKE NEWS

Fake News has already become a known term in Brazil, however, it is important to highlight its definition and explanation. According to Ruediger and Grassi:

Fake News, or notícias falsas in Portuguese, has become a commonly adopted expression for identifying the disclosure of false information, especially on the internet. However, the evolutions of this phenomenon in networked society have shown that the term is not able to explain the complexity of its practices, becoming, numerous times, an instrument of a political discourse that benefits from such simplification. (RUEDIGER; GRASSI, 2018a, p.8)

In Brazil, the FGV DAPP study shows that bots “seek to create forged discussions and interfere in debates” by creating misinformation that can interfere in electoral and democratic processes. The study shows that the emergence of automated accounts enabled manipulation strategies based on rumors and defamation. In addition to robots, cyborgs (partially automated accounts) were also identified (RUEDIGER; GRASSI, 2018, p.12).

Studies on the influence of Fake News in the U.S. election indicated that one in four people had been exposed to false rumors. However, these stories accounted for only 2.6% of the news universe, and 60% of the visits to them came from the voters with the most conservative bias, who made up 10% of the electorate. Even though fake news made up a small share of the corpus of election news, it was able to influence the political system and accentuate political polarization. The fabrication of false news is an ancient phenomenon, but social networks and technological advances have taken disinformation to an unprecedented level (DELMAZO; VALENTE, 2018 apud RUEDIGER; GRASSI, 2018, p.13).

Fake news has devastating power and has already caught the attention of the Electoral Justice, which prefers the term “Misinformation”. Fake news has always existed, especially in election periods; the greatest danger lies in software and programs that identify the ideal targets for this misinformation, and in bots, which help form echo chambers that allow fake news to spread further.

3. ARTIFICIAL INTELLIGENCE AND ITS INFLUENTIAL ROLE IN ELECTIONS

According to an experiment conducted in 2017 by the University of Oxford together with the Alan Turing Institute and the Department of Political Economy at King’s College London, political behaviour increasingly takes place on digital platforms, where people are presented with a variety of social information (HALE et al, 2017, p.1).

The experiment sought to understand how “social information” shapes voters’ political participation. The study explains that social information is information about what other people are doing, or have done, in both the digital and the real world, and that it has a long history in social psychology. However, it is difficult to observe what large numbers of people are doing offline, whereas in the digital world “many online platforms clearly indicate how many people have liked, followed, retweeted, signed petitions, or otherwise taken various actions.” (HALE et al, 2017, p.2)

The study concludes that the structure of digital platforms can have significant effects on users’ political behaviors. Similarly, the study suggests that social information can “exacerbate the turbulence in political mobilization” (HALE et al, 2017, p.16).

In 2012, another study was published by researchers from the Department of Political Science at the University of California (USA). In a controlled experiment, political mobilization messages were delivered to 61 million Facebook users during the 2010 U.S. Congressional elections (BOND, 2012, p.1):

“The results show that the messages directly influenced the political self-expression, the search for information and the real-world voting behavior of millions of people. In addition, the messages influenced not only the users who received them, but also the friends of the users and the friends of friends. The effect of social transmission on real-world voting was greater than the direct effect of the messages themselves, and almost the entire broadcast occurred among “close friends” who were more likely to have a face-to-face relationship. These results suggest that strong ties are key to spreading online and real-world behavior on human social networks.” (BOND, 2012, p.1).

The results of this study have many implications. Online political mobilization works by provoking political self-expression and by inducing the collection of social information. The study also showed that social mobilization in online networks is significantly more effective than informational mobilization: seeing familiar faces online can dramatically improve the effectiveness of a mobilization message. Beyond the direct effects of online mobilization, social influence proved important in changing users’ behavior (BOND, 2012, p.5).

Thus, when trying to influence a user’s behavior outside the digital world, one must pay attention “not only to the effect that a message will have on those who receive it, but also to the probability that the message and the behavior it stimulates will spread from person to person through the social network” (BOND, 2012, p.5). The experiment suggests that online messages can influence a variety of offline behaviors, and its authors were already concerned in 2012 with understanding the role of social media in society. It should be remembered that:

Societies are complex systems that tend to polarize into subgroups of individuals with dramatically opposed perspectives. This phenomenon is reflected – and often amplified – in online social networks, where, however, humans are no longer the only participants and coexist with social bots – that is, software-controlled accounts (STELLA; FERRARA; DOMENICO, 2018, p.1)

The study consolidated data from nearly 4 million Twitter messages generated by nearly 1 million users during the 2017 Catalan independence referendum. The analysis identified two polarized groups, Independentists and Constitutionalists, and quantified the structural and emotional roles played by social bots. Bots were shown to act within the social system to target influential humans in both groups. In this referendum, Independentists were bombarded with violent content, which increased their exposure to negative and inflammatory narratives and exacerbated online social conflict (SUNSTEIN, 2017, p. 7; STELLA; FERRARA; DOMENICO, 2018, p.1). These findings underscore the importance of developing measures to unmask such forms of automated social manipulation, since social media can profoundly affect the voting behavior of millions of people:

By interfering in developing debates on social networks, robots are directly affecting political and democratic processes through the influence of public opinion. Their action can, for example, produce an artificial opinion, or an unreal dimension of a given opinion or public figure, by sharing versions of a given theme, which spread in the network as if there were, among the portion of society represented there, a very strong opinion on a given subject (DAVIS et al., 2016 apud GRASSI et al., 2017, p.14).

Readers may think that their own opinion of a particular candidate will not change because of messages produced by bots. However, the target of this social hacking is undecided voters. As Grassi et al. explain, the coordinated sharing of a certain opinion gives it “an unreal volume […] influencing undecided users on the subject and strengthening the most radical users in the organic debate, given the more frequent location of robots at the poles of political debate.” (2017, p.14)

3.1 CONSEQUENCES FOR ELECTION CAMPAIGNS

Fake News, bots, and echo chambers can change the course of an election, as extensively discussed and shown in the previous chapter. Cambridge Analytica itself used the following line in a sales presentation of its services: “We are a behavior change agency. The holy grail of communications is when you start to change behavior [in people].” (PRIVACIDADE…, 2019)

Echo chambers can lead people to believe falsehoods, and correcting them can be difficult or impossible. One illustration given by Professor Sunstein is the belief that President Barack Obama was not born in the United States. As falsehoods go, this is not the most damaging, but it reflected and contributed to a politics of suspicion, mistrust and sometimes hatred.

A more damaging example is the set of falsehoods that helped produce the vote for “Brexit”[6] in 2016. Fake news that Turkey was supposedly joining the European Union, and would thereby open the UK to mass immigration of Turks, was essential to the outcome of the referendum. In the 2016 U.S. presidential campaign, falsehoods spread rapidly on Facebook, and they are still everywhere (SUNSTEIN, 2017, p.20; PRIVACIDADE…, 2019). According to Sunstein, “to this day, social media has not helped produce a civil war, but that day will probably come. They have already helped prevent a coup in Turkey in 2016” (SUNSTEIN, 2017, p.20):

There is a question about individual freedom. When people have multiple options and the freedom to choose between them, they have freedom of choice, and that is extremely important. Freedom requires certain background conditions, allowing people to expand their own horizons and learn what is true. It implies not only satisfaction of any preferences and values that people have, but also circumstances favorable to the free formation of preferences and values. But if people are sorting themselves into like-minded communities, their own freedom is at risk. They are living in a prison of their own creation. (SUNSTEIN, 2017, p.20).

When undecided voters do not know what is true, their choices during the electoral process can be compromised, which directly affects democracy and the right to a free vote, and may even change the outcome of an election.

3.2 CONSEQUENCES IN BRAZIL

The 2018 electoral process is a landmark for understanding both the use of digital platforms for electoral propaganda and the fight against the dissemination of disinformation.

3.2.1 INTERACTION WITH BOTS

The Directorate of Public Policy Analysis of FGV[7] (FGV-DAPP) conducted case studies to analyze the presence of bots at important political moments in Brazil, such as: Rede Globo’s debate of October 2, 2014 with the first-round presidential candidates; Rede Globo’s debate of October 24, 2014 between Dilma Rousseff and Aécio Neves, the two presidential candidates in the runoff; the pro-impeachment demonstrations of March 13, 2016; Rede Globo’s debate with the candidates for mayor of São Paulo on September 29, 2016; the general strike of April 28, 2017; and the Senate vote on the Labor Reform on July 11, 2017 (GRASSI et al., 2017, p.16).

The FGV professor explains how data are collected:

The masses of data collected by FGV/DAPP are composed of metadata (information about the data itself), and through them we explore the possibilities of identifying accounts that acted automatically during the periods analyzed. Thus, we identified that the metadata field named “generator” refers to the platform that generates the content of the tweet, which is very useful for detecting robots. (GRASSI et al., 2017, p.16)

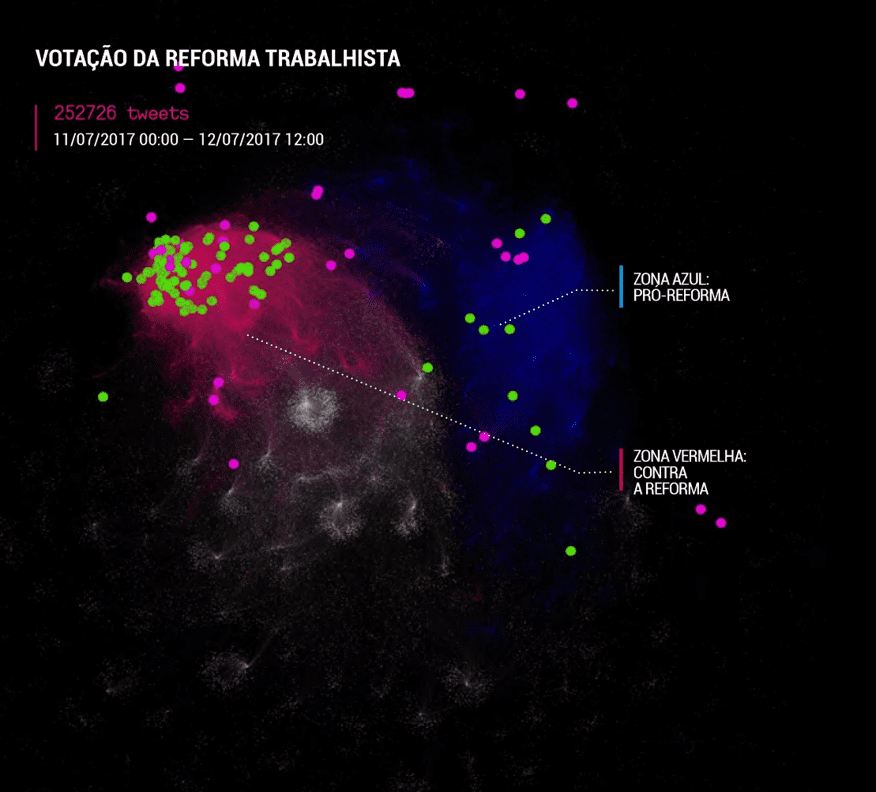

To illustrate how these robots operate, FGV produced a graph (FIG. 1) with the results of its analysis of the Senate vote on the Labor Reform. In the chart, pink dots represent accounts that used suspicious generators (GRASSI et al., 2017, p.17), and green dots represent accounts that, on at least two occasions, posted two consecutive tweets less than one second apart (p.18). In this case, as in the others, robots are present at both ends of the debate, “being in this analysis in greater numbers at the pole contrary to reform” (p. 23).

Figure 1: Labour Reform Vote
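The two criteria behind Figure 1 can be sketched as simple rules over an account’s tweets. This is an illustrative reading of FGV DAPP’s description, not its published code: the data layout, the set of suspicious generators, and the interpretation of “at least twice” as at least two qualifying pairs of consecutive tweets are all assumptions. In Twitter’s API, the “generator” FGV mentions most likely corresponds to a tweet’s `source` field (the client used to post it).

```python
from datetime import datetime, timedelta

ONE_SECOND = timedelta(seconds=1)


def fast_pairs(times, limit=ONE_SECOND):
    """Count consecutive tweets (in time order) posted less than `limit` apart."""
    ordered = sorted(times)
    return sum(1 for earlier, later in zip(ordered, ordered[1:]) if later - earlier < limit)


def flag_account(tweets, suspicious_generators, min_fast_pairs=2):
    """Return the rule(s) an account trips under this simplified reading.

    tweets: list of (timestamp, generator) pairs for a single account.
    suspicious_generators: set of generator names treated as automated (hypothetical).
    """
    flags = set()
    if any(generator in suspicious_generators for _, generator in tweets):
        flags.add("suspicious_generator")  # pink dots in Figure 1
    if fast_pairs([t for t, _ in tweets]) >= min_fast_pairs:
        flags.add("fast_consecutive_tweets")  # green dots in Figure 1
    return flags


# Hypothetical example: two pairs of tweets posted 0.5 s and 0.3 s apart.
t0 = datetime(2017, 7, 11, 12, 0, 0)
account = [
    (t0, "web_client"),
    (t0 + timedelta(milliseconds=500), "web_client"),
    (t0 + timedelta(seconds=10), "web_client"),
    (t0 + timedelta(seconds=10, milliseconds=300), "web_client"),
]
SUSPICIOUS = {"hypothetical_autoposter"}
print(flag_account(account, SUSPICIOUS))  # {'fast_consecutive_tweets'}
```

Requiring two fast pairs rather than one reduces false positives from a human who happens to post twice in quick succession, which is a plausible reason for a threshold of this kind.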

These analyses show that automated bot activity has been effective in steering public debate, with bots “increasingly present in networks, directly influencing moments of inflection and determinants for the future” (GRASSI et al., 2017, p.26).

Following the success of this study, titled Robôs, Redes Sociais e Política No Brasil (Robots, Social Networks and Politics in Brazil), which detected the presence of robots on Twitter (GRASSI et al., 2017), FGV DAPP was invited to join the Advisory Council on Internet and Elections[9] of the Superior Electoral Court (TSE) (RUEDIGER; GRASSI, 2018, p.4). In July 2018, the Digital Democracy Room[10] was launched in Rio de Janeiro, with the aim to “monitor public debate on the web and identify actions of disinformation, threats and illegitimate interventions in the political process, with the publication of daily and weekly analyses”. Through a hotsite[11] and the #observa2018 application, FGV DAPP published its analyses of the networks (RUEDIGER; GRASSI, 2018, p.5).

In one of these publications, covering the week of August 8-13, 2018, FGV DAPP “detected, through its own methodology of robot identification, the presence of 5,932 automated accounts in the debate on the elections and presidential candidates. These profiles generated 19,826 publications” (RUEDIGER, 2018, p.4) on Twitter alone.

In 2018, Facebook announced measures to protect the elections in Brazil. Its commitments included fighting disinformation; increasing ad transparency on the platform; removing impostor accounts; and removing fake digital campaign leaflets (santinhos) used to deceive voters by combining one candidate’s photo with another candidate’s party number. These and other measures were taken to ensure the authenticity of content published on the platform (RUEDIGER; GRASSI, 2018a, p.16).

But that does not seem to be enough. FGV DAPP’s analysis of the 2018 election campaigns shows that “the interferences promoted by robots often occurred in an articulated and synchronized manner, from botnets (robot networks)” (RUEDIGER; GRASSI, 2018a, p.27). The results of the analysis were as follows:

In the pre-campaign period, at least three robot networks were responsible for publishing 1,589 tweets in one week. The messages generally sought to boost and/or demobilize candidacies, especially at the poles of greatest polarization: Jair Bolsonaro versus Lula/Haddad.

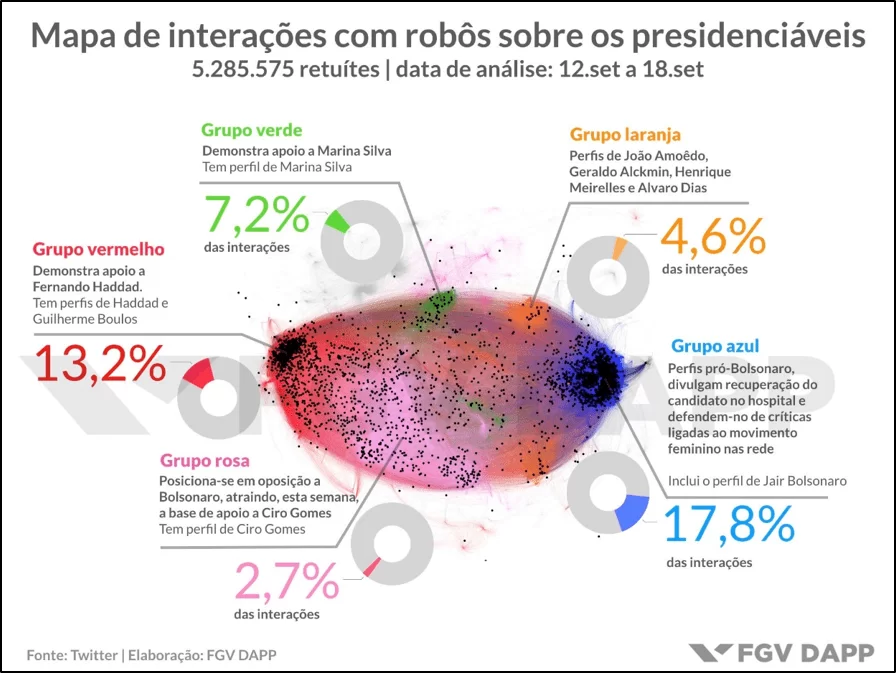

The pro-Bolsonaro and pro-Fernando Haddad bases also showed the most robot interference during the campaign period. From September 12 to 18, for example, 7,465,611 tweets and 5,285,575 retweets about the candidates were collected. Within this database, FGV DAPP’s robot detection methodology found 3,198 automated accounts, which generated 681,980 interactions, or 12.9% of total retweets (see Figure 2).

On the right, automated accounts accounted for 17.8% of the retweets in the group supporting the PSL federal deputy; on the left, they accounted for 13.2% of the interactions aligned with the PT candidacy and, to a lesser extent, with the candidacy of Guilherme Boulos of PSOL (RUEDIGER; GRASSI, 2018a, p.28).

Figure 2: Interactions with robots in the 2018 Elections

Because WhatsApp is a private messaging service, FGV DAPP could not analyze it; however, it noted the following:

Given the particularities of the platform, such as the nature of private communication, of reduced social scope (family, friends, co-workers), and the lower direct interaction between influencers and ordinary citizens, there was a different phenomenon of dispersion and viralization of political content than observed in other recent scenarios (national and international), in which Facebook and Twitter were more prominent. The many social networks now popular among Brazilians, widely used to debate the elections and the political conjuncture, present particularities regarding the process of production of and interaction with content. All information propagation strategies, however, are adapted to the properties of each platform, and as a result there are multiple ways in which misinformation and the sharing of false information manifest themselves. Not only through links or pages that simulate journalistic activity, but with intense use of videos, tutorials, blogs, memes, apocryphal texts and sensational publications; various subtypes of Fake News, therefore. (RUEDIGER; GRASSI, 2018a, p.29)

Although it has not been proven that interactions with bots or the spread of fake news altered the outcome of the 2018 Presidential Election in Brazil, it is necessary to be aware of this possibility. As already shown in this work, the now-defunct company Cambridge Analytica succeeded in helping elect the candidate whose campaign hired it in the 2016 U.S. Presidential Election.

The current President of the Republic was elected with very little free electoral airtime on TV, which indicates that he was elected largely through his campaign on social platforms. There has been a “structural change in the way successful campaigns have been organised: social networks have become the main axis of dialogue between voters and elected officials” (RUEDIGER; GRASSI, 2018a, p.29).

On the other hand, Ruediger and Grassi report positive consequences of this digital scenario. The most notable are:

1) the greater demand for transparency in the institutional relations of politics;

2) the ability to quickly act and interfere in the decision-making process of state agents and elected representatives, through direct contact with them over the Internet;

3) and the expansion of the potential role that citizens can play in the conduct of public policies and acts that interest them.

Accelerated feedback and the speed at which content is transmitted, shared and produced also make it imperative to improve the way journalistic activity and political debate respond to these new paradigms (RUEDIGER; GRASSI, 2018a, p.29-30).

3.2.2 SPREAD OF FAKE NEWS

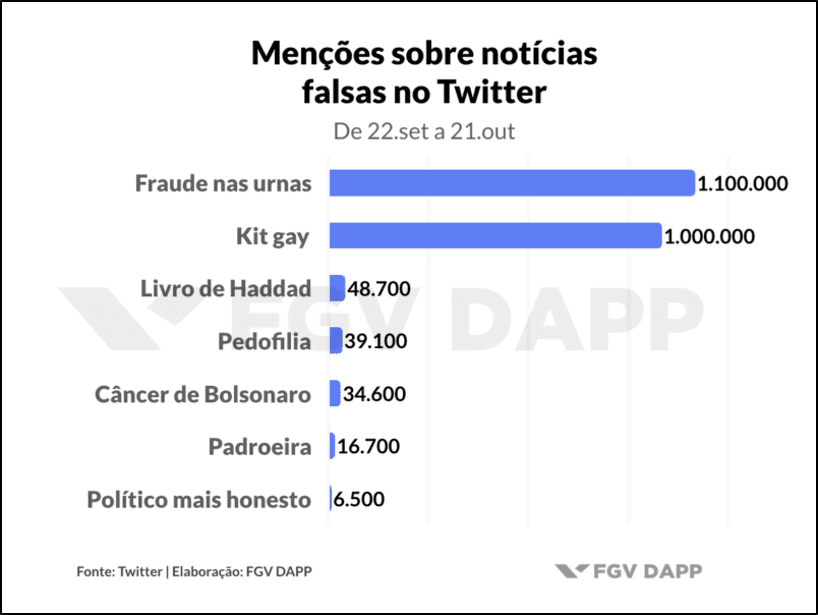

The FGV DAPP analysis conducted during the 2018 election period concluded that there was “no automated and mass distribution of fake news”. It states that disinformation was present, “but robots were not the most responsible for its dissemination.” In the week of the knife attack against Bolsonaro, for example, only 0.9% of the interactions were automated, that is, generated by robots (RUEDIGER; GRASSI, 2018a, p.29). According to the analysis, the most prominent fake news items were those listed in Figure 3:

Figure 3: Fake News

3.3 CONSEQUENCES FOR DEMOCRACY

In 2016, the U.S. presidential election drew attention to new ways of doing politics and political campaigns through digital platforms. The elections involved cases of fake news that had worldwide repercussions, such as “the alleged influence of Russian hackers who through the Internet Research Agency would have generated fake content” and reached millions of voters through Facebook and Twitter. (RUEDIGER; GRASSI, 2018, p.12)

Before that, the United Kingdom held a referendum on its withdrawal from the European Union. Investigations conducted from 2017 to early 2019 proved that the company Cambridge Analytica, together with other agencies, influenced the result of the vote by directing false messages at specific groups of people. The company was able to profile voters using Facebook user data. The Facebook scandal was reported worldwide in early 2018 with the publication of data uncovered by British journalist Carole Cadwalladr (ROSENBERG; CONFESSORE; CADWALLADR, 2018), who has since been speaking about this new threat to democracy. The British Parliament concluded its investigation and published a report in early 2019. Some of its conclusions about the use of these platforms are worth highlighting:

The electoral law is not fit for purpose and needs to be changed to reflect changes in campaigning techniques […]. There must be: absolute transparency of online political campaigns, including clear and persistent marks on all paid political ads and videos, indicating the source and advertiser; a category introduced for digital spending on campaigns; and explicit rules around the role and responsibilities of designated campaigners.

Political advertising items must be publicly accessible in a searchable repository – who’s paying for ads, which organizations sponsor the ad, who’s being targeted by ads – so that members of the public can understand the behavior of individual advertisers. It must be run independently of the advertising industry and political parties. (HOUSE…, 2019, p.24)

It is noted that “democratic societies around the world have experienced in recent years the emergence of a new communication actor capable of shaping, successfully or not, public opinion in political debates, elections, referendums and national crises: disinformation” (RUEDIGER; GRASSI, 2018a, p.4). The spread of fake news-based campaigns across digital platforms at key moments in society, along with the use of artificial intelligence to control bots (automated accounts), “has become a key tool in the strategy of certain groups to attract digital traffic, engage or even influence debates, demobilize opponents and generate false political support” (RUEDIGER; GRASSI, 2018a, p.4), and it has become a major threat to democracies around the world.

Another danger that digital platforms present to democracy is the ease with which echo chambers form, as already discussed in this work. Isolated groups increase political polarization and hatred, giving even more fuel to bots and the spread of fake news.

But the overarching question will be the various problems for a democratic society that can be created by the power to filter. Democracies may or may not be fragile, but polarization can be a serious problem, and it is increased if people reside in different universes of communication – as, in fact, they sometimes seem to do in the United States, the United Kingdom, France, Germany and elsewhere. There is no doubt that the modern communication environment, including social media, contributes to the rise of partisanship. (SUNSTEIN, 2018, p.36)

Any extremist group, terrorist organization or political party with the means to control social media platforms could then influence a nominally democratic election and gain the power to govern, which would pose a series of threats to the democratic system.

In this sense, Carole Cadwalladr, in the documentary Privacidade Hackeada (The Great Hack), makes a moving appeal for reflection on how social networks can be used to spread evil and hatred and to polarize countries:

Looks like we’re entering a new era. We can see that authoritarian governments are on the rise. And they’re using this politics of hate and fear on Facebook. Look at Brazil. There is this right-wing extremist who was elected and we know that WhatsApp, which is part of Facebook, was clearly implicated in the spread of Fake News. And look what happened in Myanmar. There is evidence that Facebook was used to incite racial hatred that caused genocide. We also know that the Russian government was using Facebook in the US. There is evidence that the Russians created fake Black Lives Matter memes, and when people clicked on them they were directed to pages where they were actually invited to protests that were organized by the Russian government. And at the same time they were setting up pages that targeted opposing groups, such as Blue Lives Matter. The point is to feed fear and hatred to turn the country against itself. Divide and Conquer. These platforms that were created to connect us are now used as weapons and it is impossible to know what is happening on the same platforms where we talk to our friends or share pictures of babies. Nothing is what it seems. (PRIVACIDADE …, 2019, 96 min)

4. ELECTORAL JUSTICE IN BRAZIL

The purpose of the Electoral Justice is to achieve “electoral truth” for the realization of democracy (VELLOSO, 1996, p.9 apud VELLOSO; AGRA, 2016). This means that the Electoral Justice is responsible for “enabling the expression of the will of voters, operationalizing all electoral procedures so that they develop in harmony and transparency, without popular sovereignty being disturbed” (VELLOSO; AGRA, 2016).

The Electoral Justice is responsible for disciplining and organizing the exercise of suffrage in order to realize popular sovereignty. It is through elections that the free formation of the voter’s will and equality between candidates are guaranteed, ensuring Brazilian democracy.

Art. 105 of the Elections Law (Law No. 9,504/97) gives the Superior Electoral Court a normative (rule-making) function: it “may issue all the instructions necessary for its faithful execution, after hearing, in advance and in a public hearing, the delegates or representatives of the political parties.” Thus, the TSE can issue rules to control the use of digital platforms in election campaigns.

4.1 ELECTORAL JUSTICE RESPONSIBILITY IN THE USE OF DIGITAL PLATFORMS

In 2017, Law No. 13,488/2017 amended Law No. 9,504/1997, giving new wording to art. 57 so that digital platforms such as Facebook, Twitter and others could be used in election campaigns from August of the election year. Thus, during the campaign for the 2018 Presidential Elections, much was said in favor of this method, which generates more results with little financial investment.

After the votes were counted, media analysis focused largely on how the presidential candidate with the most TV airtime (SHALDERS, 2018), Geraldo Alckmin (PSDB), did not even reach the second round, while the winning candidate, Jair Bolsonaro (PSL), who had only 8 seconds of electoral airtime, obtained far better results. The President himself has often used his space on social networks to praise the fact that his election campaign cost far less than those of other major candidates. Similarly, the candidate João Amoêdo (Partido NOVO), until then unknown to most of the Brazilian population, also obtained expressive results without taking part in any debate promoted by television channels and by making use of social media, finishing 5th in the first round, ahead of other better-known candidates. Thus, the 2018 elections reflected changes in the political campaign landscape, in which campaigning from then on also took place on digital platforms.

However, after the scandal involving Facebook and the now-defunct British company Cambridge Analytica, the world realized how profitable the personal data market can be. In Brazil, there are laws that prohibit and criminalize the purchase of votes[15], but these laws no longer reflect the current reality of election campaigns. One can spend any amount of money on ads on Facebook, Google or YouTube, and no one will know, because that data is inaccessible. What exists is the campaign’s accounting report to the TSE, which shows how much was spent on these ads, but their content is never revealed. Carole Cadwalladr, in her presentation at a TED conference in April this year, states:

… what happens on Facebook stays on Facebook, because only we see the content of our page, which then disappears. It’s impossible to research anything. We have no idea who saw which ads, what their impact was, or what data was used to choose the target audience. Not even who placed the ads, how much money was spent, or what nationality they were. (CADWALLADR, 2019)

The surprising number of studies and data showing how bot interactions influence users during election periods demonstrates how much “platforms should be attentive to compliance with the legal framework for election campaigns on social networks” (RUEDIGER; GRASSI, 2018, p.25). To this end, the TSE should establish rules to better monitor whether the platforms are complying with the limits already provided by law. It is the Court’s responsibility to ensure the smoothness of the elections and to protect democracy. Ruediger further suggests:

… the surprising volume of interactions of candidacies, on Twitter, Facebook, YouTube and Instagram, indicates that platforms must be attentive to compliance with the legal framework for election campaigns on social networks while, in parallel, keeping users in line with their own policies and standards (RUEDIGER; GRASSI, 2018, p.25).

The TSE should not allow election campaigns on platforms whose content is inaccessible and impossible to monitor. There is currently no way to detect whether ads are being used to manipulate a particular target audience. Thus, rules need to be put in place to require social platforms to do what the British Parliament report cited above suggested: identify the authors of publications with electoral content.

Caldas and Caldas explain what has been done on this subject in other parts of the world:

In this sense, the European Union brought the General Data Protection Regulation into effect on 25 May 2018 (EUROPEAN COMMISSION, 2018) after studies indicated that fake news was spread on the basis of the analysis of user data (MARTENS et al., 2018 apud CALDAS; CALDAS, p.214, 2019). In the same direction, on June 11, 2018, the National Council on Human Rights approved Recommendation No. 04 of June 11, 2018, which deals “with measures to combat fake news and the guarantee of the right to freedom of expression” (BRASIL, 2018b apud CALDAS; CALDAS, p.214, 2019) and recommends the approval of a Bill aimed at the protection of personal data (BRASIL, 2018a apud CALDAS; CALDAS, p.214, 2019), considering that “the production and targeting of so-called fake news today are directly related to the massive and indiscriminate collection and treatment of personal data” (BRASIL, 2018b apud CALDAS; CALDAS, p.214, 2019) (CALDAS; CALDAS, p.214, 2019).

5. HOW THE TSE HAS DEALT WITH THIS ISSUE SINCE THE 2018 ELECTIONS

In May 2019, the TSE held an International Seminar on fake news and elections together with the European Union. The seminar took place in Brasilia and had several speakers, among them: Minister Rosa Weber, President of the Superior Electoral Court (TSE), Minister Luiz Fux, Vice-President of the Supreme Federal Court (STF) and Minister Sergio Moro, Minister of Justice and Public Security.

According to the TSE website about the seminar:

During the year 2018, the Advisory Council on Internet and Elections, established by the Superior Electoral Court (TSE) on December 7, 2017, developed numerous activities related to the influence of the Internet on the elections, in particular regarding the risk of Fake News and the use of robots in the dissemination of information. These actions contributed to expanding the Electoral Justice’s knowledge of the subject, in order not only to prevent but also to facilitate the confrontation of the serious problem of the dissemination of fake news during the 2018 general elections. (BRASIL, 2019f).

Also regarding the seminar, the TSE recognizes its primary responsibility in protecting Brazilian democracy when it states:

Considering the complex equation of the phenomenon known as Fake News, in all branches of society, both in Brazil and abroad, it is up to the Electoral Justice to keep an eye on the problem, with a view to finding more effective ways of addressing it in future elections. (BRASIL, 2019f).

The main objective of the seminar was to identify ways to prevent or minimize the dissemination of fake news in the 2020 municipal elections, taking into account the experience gained during the 2018 election. The intention was to compile data, share experiences, welcome suggestions, discuss viable measures to combat fake news, and produce studies and proposals to be forwarded to the Electoral Court and the National Congress (BRASIL, 2019f).

In February of this year, Ordinance No. 115 was published on the TSE portal, which “establishes a working group responsible for conducting studies to identify conflicts in the current norm arising from electoral reforms and propose the respective systematization” (BRASIL, 2019a). This ordinance gave rise to the Diálogos para a construção da Sistematização das Normas Eleitorais (Dialogues for the Construction of the Systematization of Electoral Norms), with the aim to:

… identify and systematize the relevant legislation, pointing out inconsistencies, omissions and/or overregulation, with the Federal Constitution as a parameter and on the basis of legislative texts, resolutions and even jurisprudential discussions, in order to produce a more concise, coherent and comprehensive text suited to the current reality of Brazilian democracy. (BRASIL, 2019, p.3).

The document also highlights the need for “special attention, for example, to issues that require a convergence between electoral law, technology and digital marketing”, also citing the “possibility of boosts, used for the first time in the 2018 general elections” (BRASIL, 2019, p.3). This study shows that the TSE is already analyzing the problem of boosts and bots on social media.

Law No. 9,504/97, which underwent a mini-reform in 2017 authorizing the use of digital platforms in election advertisements, still presents several inconsistencies. According to the law, paid boosting is forbidden to individuals and limited to candidates or coalitions. In this regard, Caldas and Caldas (2019, p. 213) state:

… electoral law experts assess:

With the paid boost, loopholes are opened for illicit abuse of economic power, abuse of political power, abuse of the media, prohibited propaganda (carried out by churches, trade unions and legal entities in general), fake news, among other possible illicit acts. Some of these illegal acts may lead to the revocation of the candidacy registration or of the certificate of election (if elected) and to loss of office. (ALMEIDA; LOURA JUNIOR, 2018 apud CALDAS; CALDAS, 2019)

The original text of the bill included, in art. 57-B, §6, a provision with more effective measures to inhibit fake news during elections. However, it was vetoed by then-President Michel Temer. The text of §6 read (CALDAS; CALDAS, 2019, p. 213):

The report of hate speech, dissemination of false information or offense to the detriment of a party or candidate, made by the user of an Internet application or social network through the channel made available for this purpose by the provider itself, will result in the suspension, within a maximum of twenty-four hours, of the reported publication until the provider verifies the personal identification of the user who published it, without providing any data about the reported user to the whistleblower, except by court order. (BRASIL, 2017, emphasis added, apud CALDAS; CALDAS, 2019).

According to Caldas and Caldas, the justification for the veto was the “fear that a legislation to crack down on fake news can generate a restriction on freedom of expression”, especially in a scenario of “intense political polarization, in which political passions cause the boundary between truth, objective and subjective point, to fade.” (CALDAS; CALDAS, 2019, p. 214)

Caldas and Caldas conclude that, although art. 25 of TSE Resolution No. 23,551/2017 contains a “provision to try to prevent the spread of fake news… the speed of justice will probably not be enough to prevent this occurrence… precisely because its characteristic is the speed in propagation.” (CALDAS; CALDAS, 2019, p. 214).

Clearly, finding a plausible solution will not be easy. Any more drastic measure to inhibit fake news or restrict certain uses of digital platforms may well conflict with freedom of expression. It is a paradox that is difficult to resolve. Nevertheless, the Court has taken some steps.

The TSE portal and its pages on social networks have illustrative and educational videos about “the myths in elections” and even tips so that voters do not lose their voter registration (título de eleitor). However, until September of this year there was no video alerting voters and raising their awareness of the danger of fake news. In early September, the TSE began broadcasting on its YouTube channel[16] the video series Coping with Disinformation[17] (BRASIL, 2019b). To date (October 21, 2019), this series has 6 videos explaining how effectively and quickly the TSE dealt with fake news about the operation of electronic voting machines, and also teaching voters not to use the term fake news because, according to the experts interviewed in the videos, it has a pejorative and vague meaning. Moreover, according to them, the term is ambiguous and imprecise and disqualifies the press, being used by politicians for this purpose. Thus, they recommend using the term “misinformation”.

As can be seen, the videos produced by the TSE praise the Court itself, stating how quickly it acted in the fight against misinformation, in addition to explaining why the use of the term fake news is wrong. Although they include a few seconds of tips on how to reduce misinformation, the videos do not specifically inform voters about automated accounts capable of influencing public opinion, for example by explaining how bots operate. At no point do the videos clarify the consequences that misinformation can have on the outcome of an election. In addition, they are not available in a place voters are likely to find them. How many voters will watch these videos? So far, they have fewer than 500 views.

In another attempt to inhibit the spread of fake news, in 2019 the Legislative Branch added art. 326-A to Law No. 4,737/65 (Electoral Code), which reads:

Art. 326-A. To give rise to the opening of a police investigation, judicial proceedings, administrative investigation, civil inquiry or administrative misconduct action, attributing to someone the commission of a crime or infraction of which one knows that person to be innocent, for electoral purposes:

Penalty – imprisonment, from 2 (two) to 8 (eight) years, and fine.

§ 1 – The penalty is increased by one-sixth if the agent uses anonymity or a false name.

§ 2 – The penalty is reduced by half if the imputation concerns the commission of a misdemeanor.

§ 3 – The same penalties of this article apply to anyone who, proven to be aware of the innocence of the accused and for electoral purposes, discloses or spreads, by any means or form, the act or fact that was falsely attributed to him.[18] (BRASIL, 1965)

The President of the Republic vetoed § 3, claiming that “the conduct of slander with electoral purpose is already typified in another provision of the Electoral Code”, with a penalty of six months to two years, so that the new provision would violate the “principle of proportionality.” However, Congress overturned the veto in August 2019, establishing yet another electoral crime (Congresso…, 2019).

Also in August, the TSE launched the Program to Combat Disinformation Focused on the 2020 Elections. As explained on the Court’s website:

The program was organized in six thematic axes. The first, “Internal Organization”, aims at the integration and coordination between the levels and areas that make up the organizational structure of the Electoral Justice and the definition of the respective attributions against disinformation.

Another axis that will be worked on is “Media and Information Literacy”, which aims to empower people to identify and check misinformation, in addition to stimulating understanding of the electoral process.

Under the topic “Containment of Disinformation”, the idea is to institute concrete measures to discourage the proliferation of false information. With the axis “Identification and Verification of Disinformation”, the TSE seeks to improve existing methods and develop new ones for identifying possible practices of disseminating fallacious content.

The axes “Improvement of the Legal System” and “Improvement of Technological Resources” are also part of the program launched by the TSE.

Before the signing of the terms of accession, Minister Rosa Weber announced the launch of a website that will gather information about disinformation[19] and also a book resulting from the debates that took place at the International Seminar Fake News and Elections, held at the TSE in May this year. The work is available on the Court Portal[20]. (BRASIL, 2019e)

On October 22 of this year, Google, Facebook, Twitter and WhatsApp joined the program. At the time, Minister Rosa Weber “classified as harmful the effect caused by disinformation to democracy, the electoral process and society in general.” She said the program’s focus “is to provoke the involvement of all social spheres in combating the dissemination of misleading information on the Internet.” (BRASIL, 2019c). She also said that these companies have the capacity to improve technologies that identify “the misuse of bots and other automated content dissemination tools.” The President of the TSE concluded her speech with the following hope:

I hope that we can reach the end of the 2020 elections convinced that the electoral process has not suffered greater influence from the phenomenon of disinformation and that this was due, in large part, to the contribution of the partners of our program. (BRASIL, 2019c).

The Court’s program already seems to be bringing some results from the platforms that have joined it. On October 29, 2019, the social network Twitter met with the program’s management group to “align joint strategies” (BRASIL, 2019d). The next day, the press announced that the social network had decided to ban all kinds of political advertising (TWITER…, 2019). However, the Electoral Justice’s concern seems to be limited to combating misinformation; it says nothing about the ability of companies like the now-defunct Cambridge Analytica to classify voters’ political profiles using the data of social media users.

6. CONCLUSION

Analyzing social networks as influencers of political debate is fundamental to understanding the current state of democracies (RUEDIGER; GRASSI, 2018) and to reflecting on the Brazilian electoral future.

Thus, for social networks to remain a democratic space of opinion and information, it is necessary to understand the organic nature of the debates and to identify those responsible for coordinated actions to boost messages. Networks need to become more transparent and to seek to understand the interests behind hiring these automation services and spreading disinformation. Expanding the ability to identify and crack down on malicious automation of social media profiles should be a priority (GRASSI et al., 2017).

Meanwhile, the Electoral Court should promote awareness-raising policies for voters who use digital platforms about the mechanisms used for manipulation, from the formation of echo chambers to the use of artificial intelligence and bots that intentionally direct messages to specific recipients. This would allow people to filter what they see and to limit the growing power of providers to select each user individually, analyzing them on the basis of social information gathered from their profiles on these platforms.

Cass Sunstein is correct when he insists that, in a diverse society, the system of freedom of expression and the right to privacy require much more than restrictions on government censorship and respect for individual choices. While censorship is a threat to democracy and freedom, a well-functioning system of free expression must meet two distinct requirements. First, people should be exposed to materials they would not have chosen in advance, thus avoiding the formation of echo chambers. Second, these materials should involve topics and views that users of digital platforms have not sought, and may not even agree with, but which are important to avoid polarization and extremism. The Harvard professor explains that he does not suggest that the government force people to see things they want to avoid. But he argues that unplanned and unanticipated encounters are central to democracy, hence his appeal for serendipity (SUNSTEIN, 2017).

According to Caldas and Caldas (2019), fake news, or misinformation, spreads quickly and has long-lasting consequences. Dealing with this phenomenon requires efficient and rapid measures to reduce its spread and effects, since this dissemination can interfere in the Brazilian democratic process, as seen in this work. It is also necessary to create instruments to identify the agents responsible for these episodes and tools to measure the possible damage caused in an election. Measures that allow greater control over access to user data are also needed, since content targeting depends on identifying the profiles of a target audience.

It is extremely urgent that the Electoral Justice, together with the Legislative Branch, create means of protecting digital data, in order to prevent new Cambridge Analyticas from emerging and using their profile analyses to direct messages to chosen targets and interfere in the democratic process of elections.

Until these measures are taken, voters need to be made aware. Brazil is living in an era of political polarization; this is an established fact. It makes no difference whether the individual favors left-wing or right-wing policies: people tend not to support an idea that comes from a party opposed to their views, even if they agree with it. In this context, it seems easy to manipulate a target group so that hatred and chaos are spread. And, as seen in this paper, it might even be possible to change the outcome of an election.

Thus, the action of the Electoral Justice appears timid given the scope of this debate, that is, the need to educate voters who use these platforms about the dangers of data manipulation on social networks, beyond the focus already given to raising awareness about disinformation.

Fake news has always existed; what is new is the ability of some software to analyze the profile of each individual voter. This ability is a novelty of the digital age and has immense potential to target particular groups and could even manipulate the outcome of an electoral process.

The Electoral Justice must safeguard the smoothness of the elections. It is therefore necessary to make the population aware of these means of manipulation and to educate people to protect their data and question the information they receive, especially on social networks. Perhaps this polarization will diminish one day, and with it the potential for manipulation that exists on social platforms.

REFERENCES

BECKER, Lauro. Site, blog, landing page, hotsite ou e-commerce? Entenda a diferença! Orgânica Digital, jul. 2016. Disponível em: https://www.organicadigital.com/blog/site-blog-landing-page-hotsite-ou-e-commerce-entenda-a-diferenca/. Acesso em: 20 out. 2019.

BITTAR, Eduardo C. B. Metodologia da pesquisa jurídica. 15. ed. São Paulo: Saraiva, 2017. ISBN: 9788547220037.

BOND, Robert M. et al. A 61-million-person experiment in social influence and political mobilization. Nature, n. 489, p. 295-298, set. 2012. DOI: https://doi.org/10.1038/nature11421. Disponível em: https://www.nature.com/articles/nature11421. Acesso em: 8 jan. 2019.

BRASIL. Presidência da República. Casa Civil. Subchefia para Assuntos Jurídicos. Constituição da República Federativa do Brasil de 1988. Portal da Legislação. Available at: http://www.planalto.gov.br/ccivil_03/constituicao/constituicao.htm. Accessed on: 10 May 2019.

BRASIL. Presidência da República. Casa Civil. Subchefia para Assuntos Jurídicos. Lei nº 9.504, de 30 de setembro de 1997. Lei das Eleições. Portal da Legislação. Available at: http://www.planalto.gov.br/ccivil_03/leis/l9504.htm. Accessed on: 10 May 2019.

BRASIL. Presidência da República. Casa Civil. Subchefia para Assuntos Jurídicos. Lei nº 4.737, de 15 de julho de 1965. Código Eleitoral. Portal da Legislação. Available at: http://www.planalto.gov.br/ccivil_03/leis/l4737.htm. Accessed on: 22 Oct. 2019.

BRASIL. Tribunal Superior Eleitoral. Diálogos para a construção da Sistematização das Normas Eleitorais. 2019. Available at: http://www.tse.jus.br/legislacao/sne/arquivos/gt-iii-propaganda-estudos-preliminares. Accessed on: 12 Aug. 2019.

BRASIL. Tribunal Superior Eleitoral. Legislação. 2019a. Available at: http://www.tse.jus.br/legislacao/compilada/prt/2019/portaria-no-115-de-13-de-fevereiro-de-2019. Accessed on: 12 Aug. 2019.

BRASIL. Tribunal Superior Eleitoral. Portal da Comunicação TSE. 2019b. Available at: http://www.tse.jus.br/imprensa/noticias-tse/2019/Setembro/enfrentamento-a-desinformacao-tse-veicula-serie-de-cinco-videos-sobre-o-tema?fbclid=IwAR2JRU6Yu0u-gRV1-wACl3K4Eic8l-jtllgIjG64kpTVwW1so6Xi8NSx2yY. Accessed on: 21 Oct. 2019.

BRASIL. Tribunal Superior Eleitoral. Portal da Comunicação TSE. 2019c. Available at: http://www.tse.jus.br/imprensa/noticias-tse/2019/Outubro/google-facebook-twitter-e-whatsapp-aderem-ao-programa-de-enfrentamento-a-desinformacao-do-tse. Accessed on: 30 Oct. 2019.

BRASIL. Tribunal Superior Eleitoral. Portal da Comunicação TSE. 2019d. Available at: http://www.tse.jus.br/imprensa/noticias-tse/2019/Outubro/grupo-gestor-do-programa-de-enfrentamento-a-desinformacao-recebe-representante-do-twitter-para-alinhar-estrategias-conjuntas. Accessed on: 30 Oct. 2019.

BRASIL. Tribunal Superior Eleitoral. Portal da Comunicação TSE. 2019e. Available at: http://www.tse.jus.br/imprensa/noticias-tse/2019/Agosto/tse-lanca-programa-de-enfrentamento-a-desinformacao-com-foco-nas-eleicoes-2020. Accessed on: 30 Oct. 2019.

BRASIL. Tribunal Superior Eleitoral. Seminário Internacional sobre Fake News e Eleições. 2019f. Available at: http://www.tse.jus.br/hotsites/seminario-internacional-fake-news-eleicoes/. Accessed on: 12 Aug. 2019.

CADWALLADR, Carole. Revealed: 50 million Facebook profiles harvested for Cambridge Analytica in major data breach. The Guardian, 17 Mar. 2018. Available at: https://www.theguardian.com/news/2018/mar/17/cambridge-analytica-facebook-influence-us-election. Accessed on: 26 Apr. 2019.

CADWALLADR, Carole. Facebook’s role in Brexit — and the threat to democracy. TED Ideas worth spreading, Apr. 2019. Available at: https://www.ted.com/talks/carole_cadwalladr_facebook_s_role_in_brexit_and_the_threat_to_democracy/transcript?language=en. Accessed on: 4 Aug. 2019.

CALDAS, Camilo O. L.; CALDAS, Pedro N. L. Estado, democracia e tecnologia: conflitos políticos e vulnerabilidade no contexto do big-data, das fake news e das shitstorms. Perspectivas em Ciência da Informação, Belo Horizonte, v. 24, n. 2, Apr./June 2019. Available at: http://www.scielo.br/scielo.php?script=sci_arttext&pid=S1413-99362019000200196&tlng=pt. Accessed on: 20 Oct. 2019.

CONGRESSO derruba veto e mantém pena para “fake news” eleitoral. Exame, Aug. 2019. Available at: https://exame.abril.com.br/brasil/congresso-mantem-veto-a-prestacao-de-assistencia-odontologica-obrigatoria/. Accessed on: 22 Oct. 2019.

FERRARA, Emilio et al. The Rise of Social Bots. Communications of the ACM, New York, v. 59, n. 7, July 2016. Available at: https://cacm.acm.org/magazines/2016/7/204021-the-rise-of-social-bots/fulltext#R24. Accessed on: 20 Oct. 2019.

GRASSI, Amaro et al. Robôs, redes sociais e política no Brasil: estudo sobre interferências ilegítimas no debate público na web, riscos à democracia e processo eleitoral de 2018. FGV DAPP, Rio de Janeiro, 20 Aug. 2017. Available at: http://hdl.handle.net/10438/18695. Accessed on: 12 Jan. 2019.

HALE, Scott et al. How digital design shapes political participation: A natural experiment with social information. PLoS One, 27 Apr. 2018. DOI: 10.1371/journal.pone.0196068. Available at: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0196068. Accessed on: 8 Jan. 2019.

HOUSE OF COMMONS (United Kingdom). Disinformation and ‘fake news’: Final Report. London: House of Commons, 2019. 111 p. Available at: https://publications.parliament.uk/pa/cm201719/cmselect/cmcumeds/1791/1791.pdf. Accessed on: 19 Feb. 2019.

MONTEIRO, Cláudia S.; MEZZAROBA, Orides. Manual de metodologia da pesquisa no Direito. 7. ed. São Paulo: Saraiva, 2017. ISBN: 9788547218720.

MUNGER, Kevin. Influence? In this economy? Tech-savvy young people take internet literacy for granted, and that can cause all kinds of problems. The Outline, 17 Feb. 2018. Available at: https://theoutline.com/post/3443/devumi-twitter-bots-savviness-gap. Accessed on: 14 Jan. 2019.

NETTO, Paulo R. WhatsApp confirma ação de empresas em disparo de mensagens durante eleições. O Estado de São Paulo, 8 Oct. 2019. Available at: https://politica.estadao.com.br/blogs/estadao-verifica/whatsapp-confirma-acao-de-empresas-em-disparo-de-mensagens-durante-eleicoes/. Accessed on: 2 Nov. 2019.

PRIVACIDADE Hackeada [The Great Hack]. Directed by: Karim Amer, Jehane Noujaim. Produced by: Karim Amer, Pedro Kos, Geralyn Dreyfous, Judy Korin. Featuring: Brittany Kaiser, Carole Cadwalladr, David Carroll and others. Written by: Karim Amer, Pedro Kos, Erin Barnett. United States: Netflix, 2019 (113 min). Produced by Netflix. Available at: https://www.netflix.com. Accessed on: 4 Aug. 2019.

PUPO, Amanda; PIRES, Breno. TSE abre ação sobre compra de mensagens anti-PT no WhatsApp. O Estado de São Paulo, 19 Oct. 2019. Available at: https://politica.estadao.com.br/noticias/eleicoes,tse-abre-acao-sobre-compra-de-mensagens-anti-pt-no-whatsapp,70002555142. Accessed on: 2 Nov. 2019.

RAMAYANA, Marcos. Direito Eleitoral. 16. ed. Niterói: Impetus, 2018. ISBN: 9788576269601.

ROSENBERG, Matthew; CONFESSORE, Nicholas; CADWALLADR, Carole. How Trump consultants exploited the Facebook data of millions. The New York Times, 17 Mar. 2018. Available at: https://www.nytimes.com/2018/03/17/us/politics/cambridge-analytica-trump-campaign.html. Accessed on: 20 Dec. 2018.

RUEDIGER, Marco A. O estado da desinformação: Eleições 2018. FGV DAPP, Rio de Janeiro, 18 Sept. 2018. Available at: http://hdl.handle.net/10438/25743. Accessed on: 3 Jan. 2019.

RUEDIGER, Marco A.; GRASSI, Amaro. Redes sociais nas eleições 2018. FGV DAPP, Rio de Janeiro, 5 Oct. 2018. Available at: http://hdl.handle.net/10438/25737. Accessed on: 3 Jan. 2019.

RUEDIGER, Marco A.; GRASSI, Amaro. Desinformação na era digital: amplificações e panorama das Eleições 2018. FGV DAPP, Rio de Janeiro, 30 Nov. 2018a. Available at: http://hdl.handle.net/10438/25742. Accessed on: 3 Jan. 2019.

RUEDIGER, Marco A.; GRASSI, Amaro. Bots e o Direito Eleitoral Brasileiro nas Eleições de 2018. FGV DAPP, Rio de Janeiro, 15 Jan. 2019. Available at: http://hdl.handle.net/10438/26227. Accessed on: 17 Feb. 2019.

SHALDERS, André. Eleições 2018 estreia na TV com força em cheque. BBC News Brasil, 18 Aug. 2018. Available at: https://www.bbc.com/portuguese/brasil-45364384. Accessed on: 18 Aug. 2019.

SILVA, Carla A. et al. Uso de redes sociais e softwares para disseminação e combate de fake news nas eleições. In: CONGRESSO NACIONAL UNIVERSIDADE, EAD E SOFTWARE LIVRE, 2018, Belo Horizonte. Proceedings […]. Belo Horizonte: UFMG, 2018. Available at: http://www.periodicos.letras.ufmg.br/index.php/ueadsl/article/view/14413. Accessed on: 4 Jan. 2019.

STELLA, Massimo; FERRARA, Emilio; DE DOMENICO, Manlio. Bots increase exposure to negative and inflammatory content in online social systems. Proceedings of the National Academy of Sciences of the United States of America, v. 115, n. 49, p. 12435–12440, Dec. 2018. DOI: 10.1073/pnas.1803470115. Available at: https://www.pnas.org/content/115/49/12435. Accessed on: 8 Jan. 2019.

SUNSTEIN, Cass R. #Republic: Divided democracy in the age of social media. Princeton: Princeton University Press, 2017. ISBN: 9780691175515.

TÖRNBERG, Petter. Echo chambers and viral misinformation: Modeling fake news as complex contagion. PLoS One, 20 Sept. 2018. DOI: 10.1371/journal.pone.0203958. Available at: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0203958. Accessed on: 13 Jan. 2019.

TWITTER vai banir todo tipo de propaganda política, o que pressiona Facebook. O Globo, 30 Oct. 2019. Available at: https://oglobo.globo.com/mundo/twitter-vai-banir-todo-tipo-de-propaganda-politica-que-pressiona-facebook-24051553. Accessed on: 31 Oct. 2019.

VELLOSO, Carlos M. D. S.; AGRA, Walber D. M. Elementos de direito eleitoral. 5. ed. São Paulo: Saraiva, 2016. ISBN: 9788547208073.

APPENDIX – FOOTNOTE REFERENCES

3. A social bot is a computer algorithm that automatically produces content and interacts with humans on social media, trying to mimic and possibly alter their behavior (FERRARA, 2016).

4. Echo chambers are environments in which a person encounters only beliefs or opinions that coincide with their own, so that their existing views are reinforced and alternative ideas are not considered (CAMBRIDGE, 2019).

5. This item does not exist in the Brazilian version of the Facebook page.

6. The United Kingdom's withdrawal from the European Union.

7. A center for applied social research whose mission is to promote innovation in public policy.

8. Available at: http://hdl.handle.net/10438/18695. Accessed on: 12 Jan. 2019.

9. Created in December 2017 to discuss the potential impact of fake news and the use of bots during the 2018 Brazilian elections (RUEDIGER; GRASSI, 2018, p. 4).

10. The Room also has a group of Digital Democracy Observers who follow the analyses it produces. This group includes researchers from the Department of Communication Studies (Northwestern University); the Center for Studies on Media and Society in Argentina (Universidad de San Andrés); José Maurício Domingues (Institute of Social and Political Studies, State University of Rio de Janeiro); the School of Communications and Arts (USP); Luciano Floridi (Oxford Internet Institute, University of Oxford); and the University of Brasília (RUEDIGER; GRASSI, Oct. 2018, p. 5).

11. “The hotsite is a smaller page or site created for specific situations and for a certain period of time.” (BECKER, 2016)

12. Available at: http://hdl.handle.net/10438/25742. Accessed on: 3 Jan. 2019.

13. Available at: https://www1.folha.uol.com.br/poder/2019/08/tse-ouve-testemunha-em-acao-sobre-mensagens-pro-bolsonaro-no-whatsapp.shtml. Accessed on: 22 Aug. 2019.

14. Available at: http://hdl.handle.net/10438/25742. Accessed on: 3 Jan. 2019.

15. Art. 41-A, caput, of Law No. 9,504/97 (Elections Law): Except as provided in art. 26 and its items, it constitutes capture of suffrage (vote buying), prohibited by this Law, for a candidate to donate, offer, promise, or deliver to a voter, for the purpose of obtaining his or her vote, any good or personal advantage of any nature, including employment or public office, from the registration of the candidacy up to and including election day, under penalty of a fine of one thousand to fifty thousand Ufir and revocation of the registration or diploma, following the procedure set out in art. 22 of Complementary Law No. 64 of May 18, 1990.

Art. 299, caput, of Law No. 4,737/1965 (Electoral Code): To give, offer, promise, request, or receive, for oneself or for another, money, a gift, or any other advantage in order to obtain or give a vote, or to secure or promise abstention, even if the offer is not accepted: Penalty: imprisonment of up to four years and payment of five to fifteen day-fines.

16. The TSE YouTube channel can be found at: www.youtube.com/tse

17. The series can be found at: https://www.youtube.com/watch?v=1fSmN6BQvt0&list=PLljYw1P54c4zazS2rQVEneIyvTlSh27bo

18. Art. 326-A, added by art. 2 of Law No. 13,834/2019.

19. http://www.justicaeleitoral.jus.br/desinformacao/#

20. http://www.justicaeleitoral.jus.br/desinformacao/arquivos/livro-fake%20news-miolo-web.pdf

[1] Technologist in Systems Analysis and Development (Unifran); Law graduate.

[2] PhD in Environmental Sciences. Master's degree in Environmental Engineering. Lato sensu postgraduate specialization in Tax Law. Law degree.

Submitted: January 2020.

Approved: March 2020.